Generative AI alters the physics of content on the internet. Previously, computers could only understand an image or a text post by cross-referencing it against thousands of platform-specific user interactions with it. Now generative models can build these kinds of semantic connections directly from the media itself. This means people can search, discover, and transform digital media outside the confines of any walled garden. Enter The Vibe: the core primitive for a post-platform web, defining a standardized interface between media curated by users and Generative AI.

CASE 1

Vibe-based Computing

A New Way of Being, Online

Vibes are everywhere online. Their form uniquely matches the pace and tenor of the web: continuous, social, ever-evolving. But with the advent of Generative AI, a “vibe” can take on new meaning in cyberspace.

Intuitively, generative models work by “finding the vibe” of the media they are prompted with and translating it into the media they output. This metaphor lays the foundation for The Vibe as a new UI object and language that opens up unexplored territory for developers building on top of these models. Vibe-based Computing develops a novel mode of Human-Computer Interaction between internet users and AI, with The Vibe as its central primitive.

From Platforms to Vibes

At its most basic, a Vibe is an object that can hold any number and any kind of digital content. You can have a Vibe of your vacation photos, or a Vibe of longevity research papers, or a Vibe of all the tweets you've ever written. But while a Vibe begins its life with the media uploaded by its owner, it becomes richer in detail and sophistication over time as it builds a deeper understanding of its contents in concert with AI.

Dynamic Media Ontology

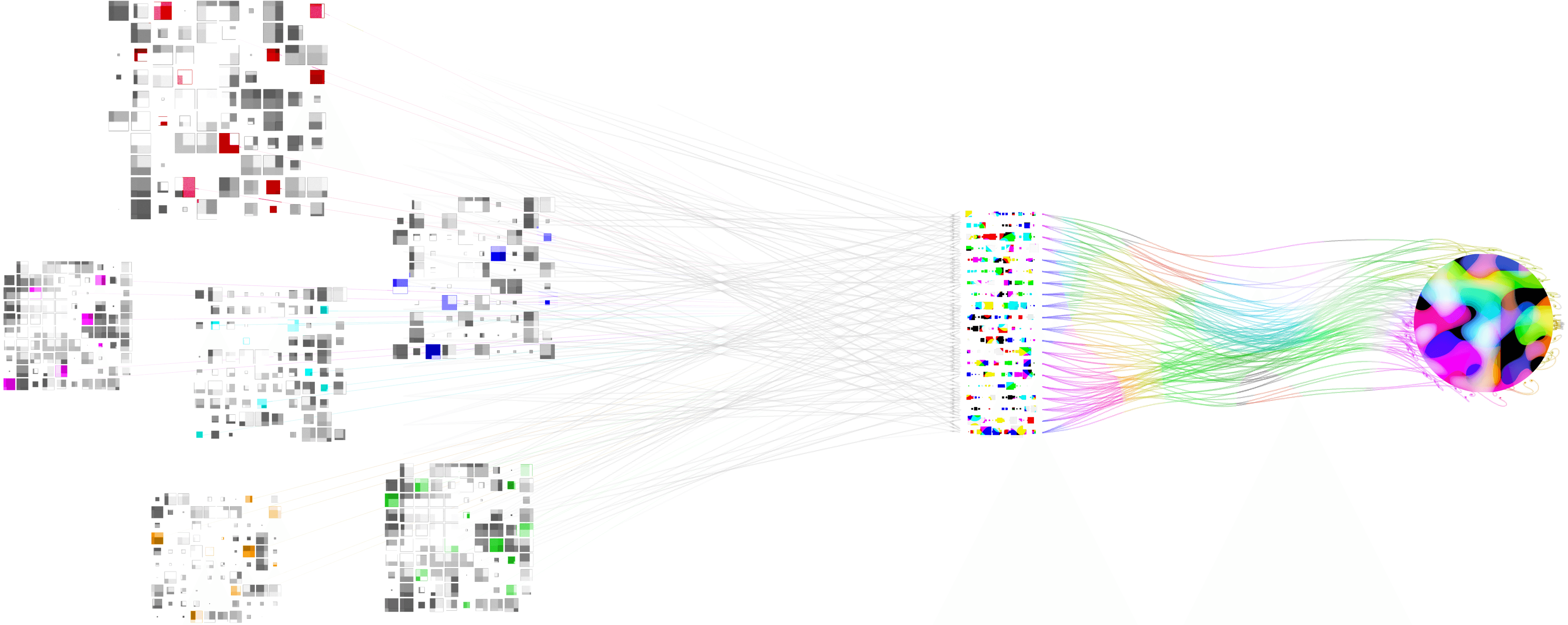

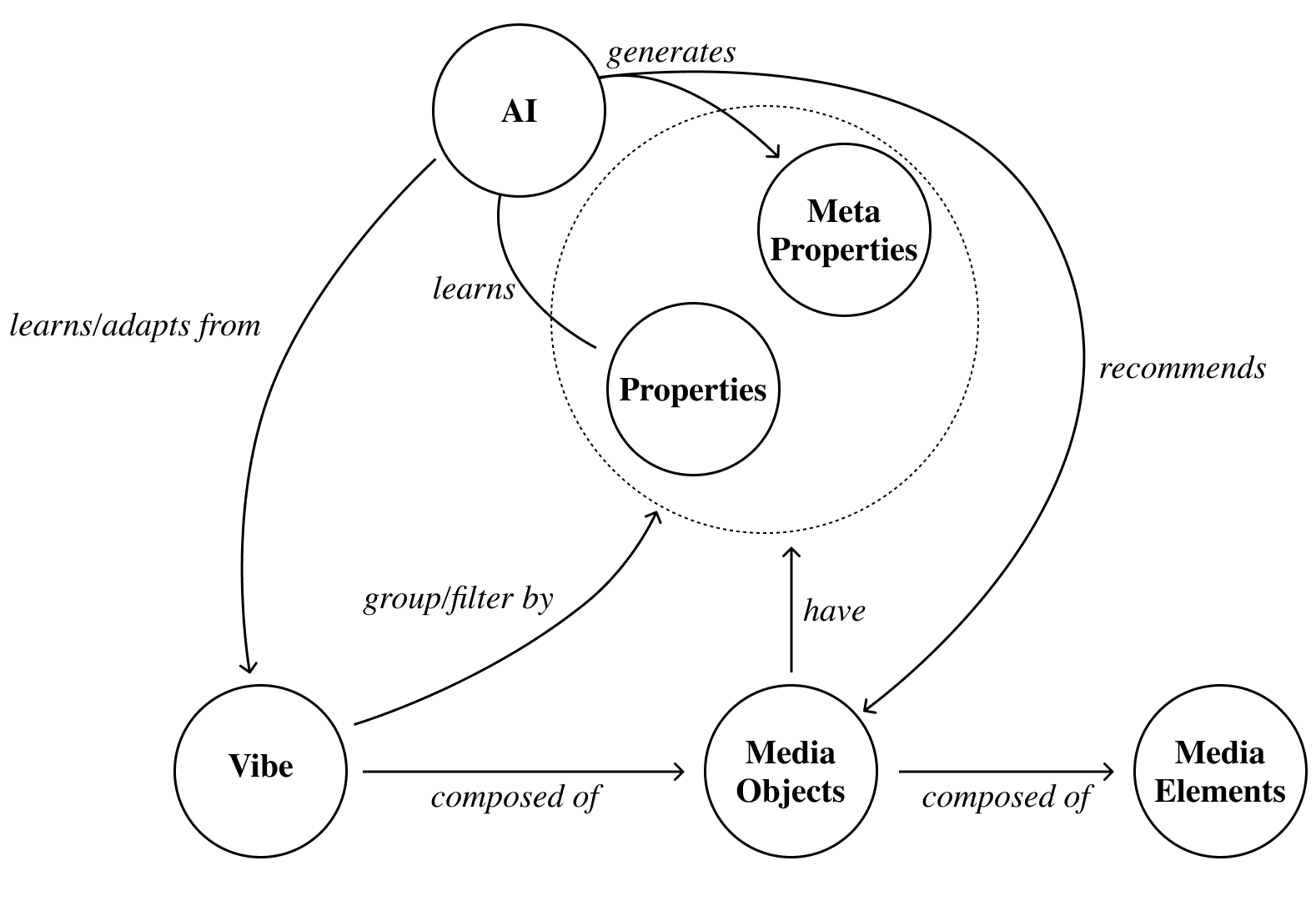

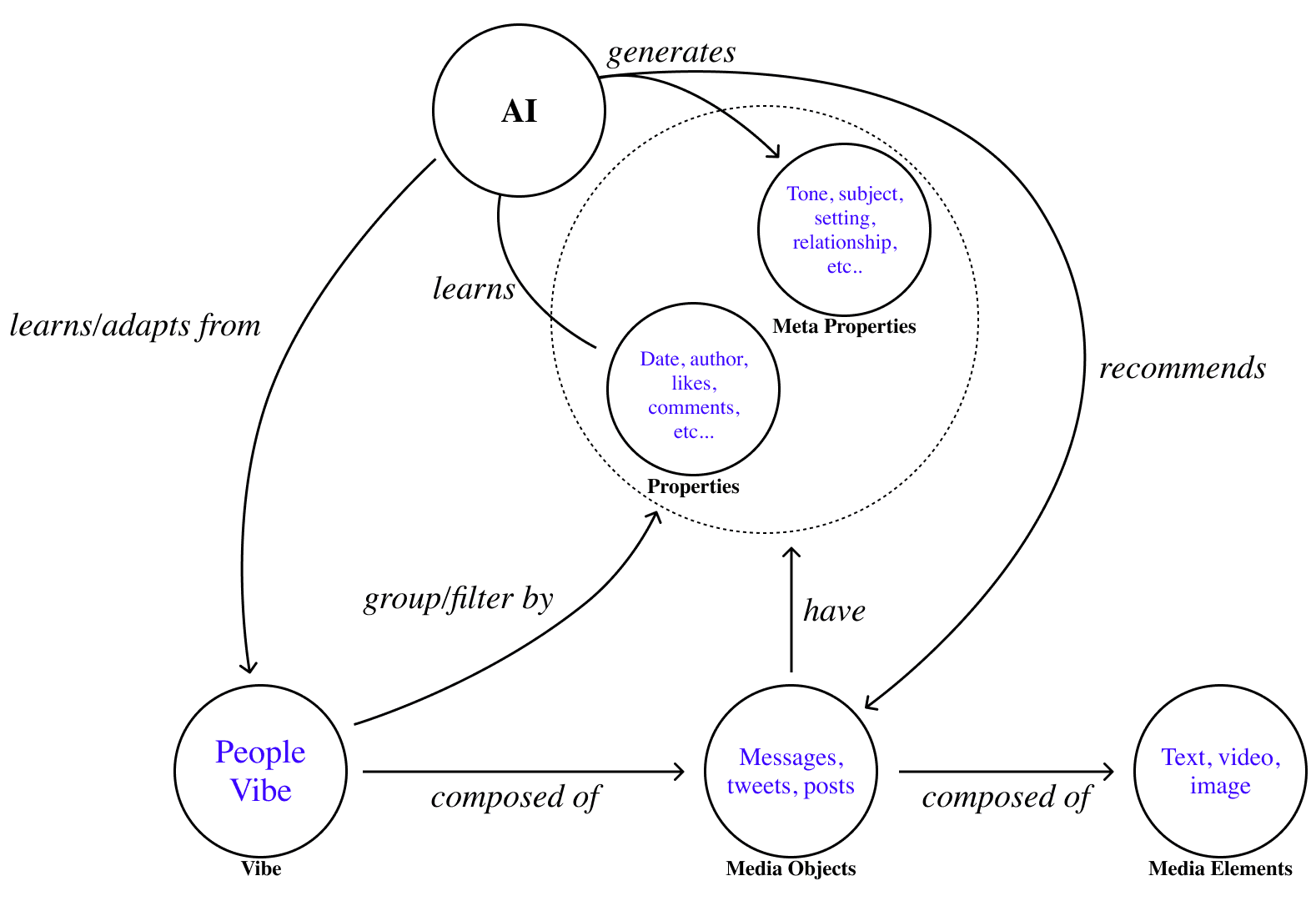

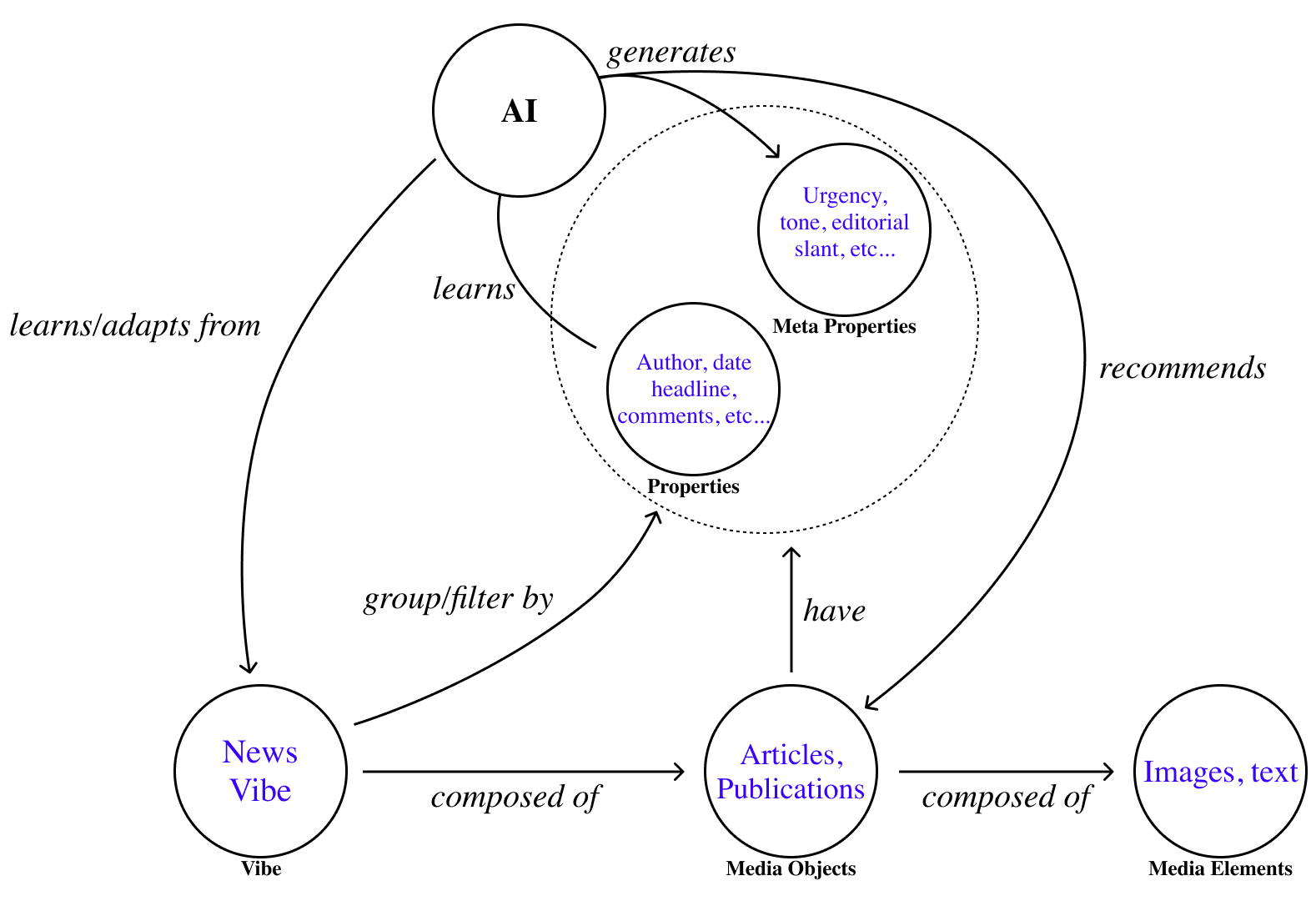

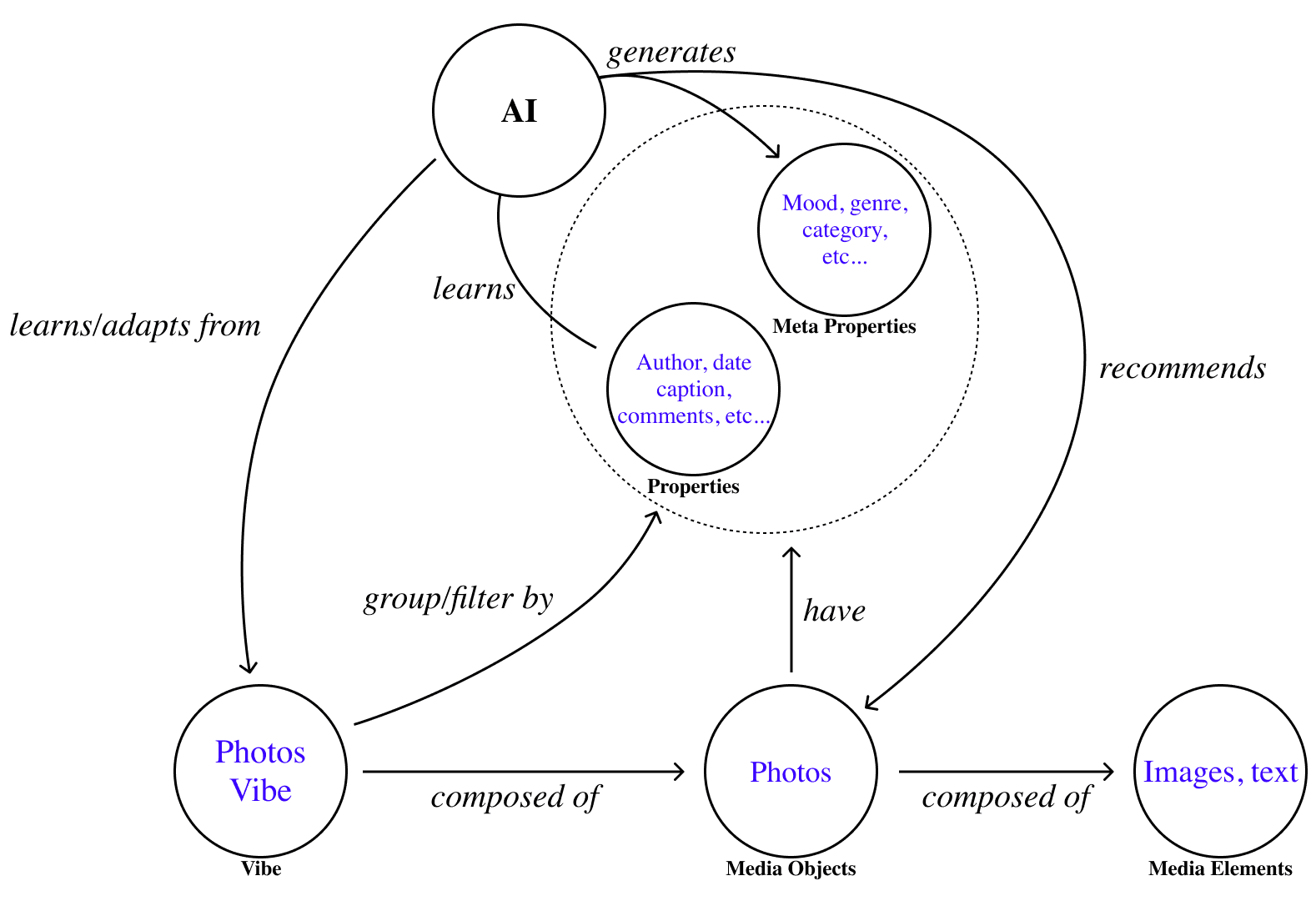

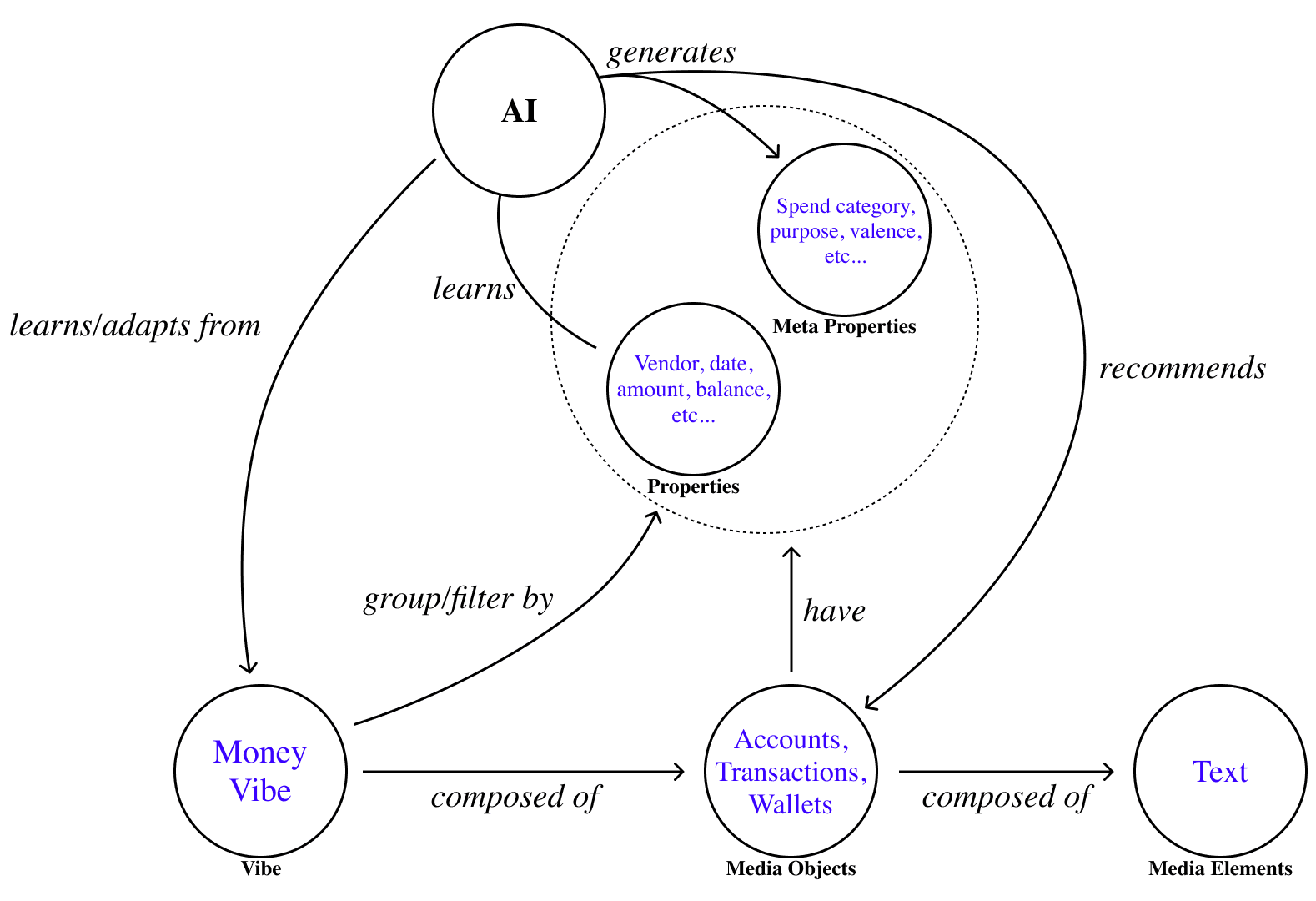

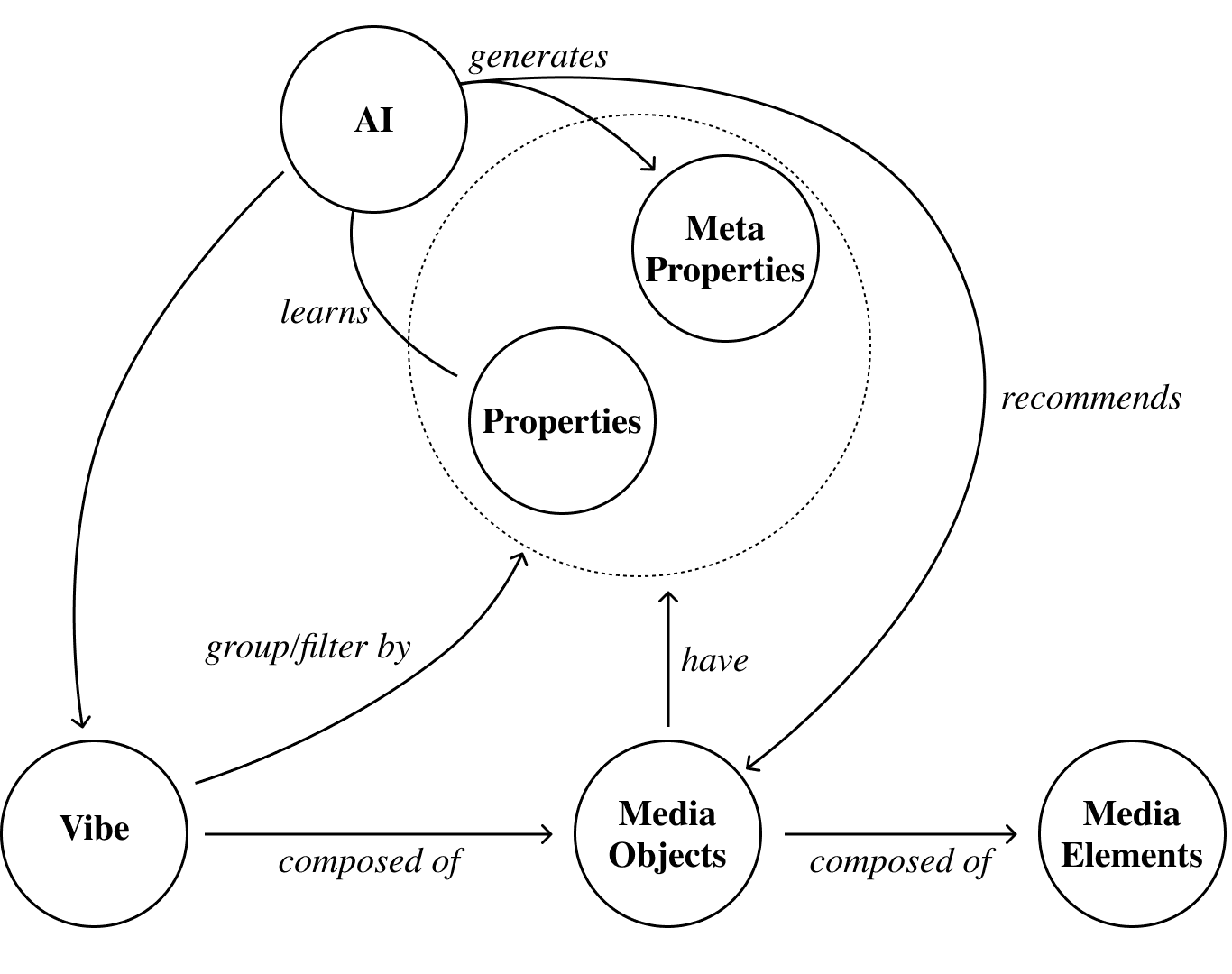

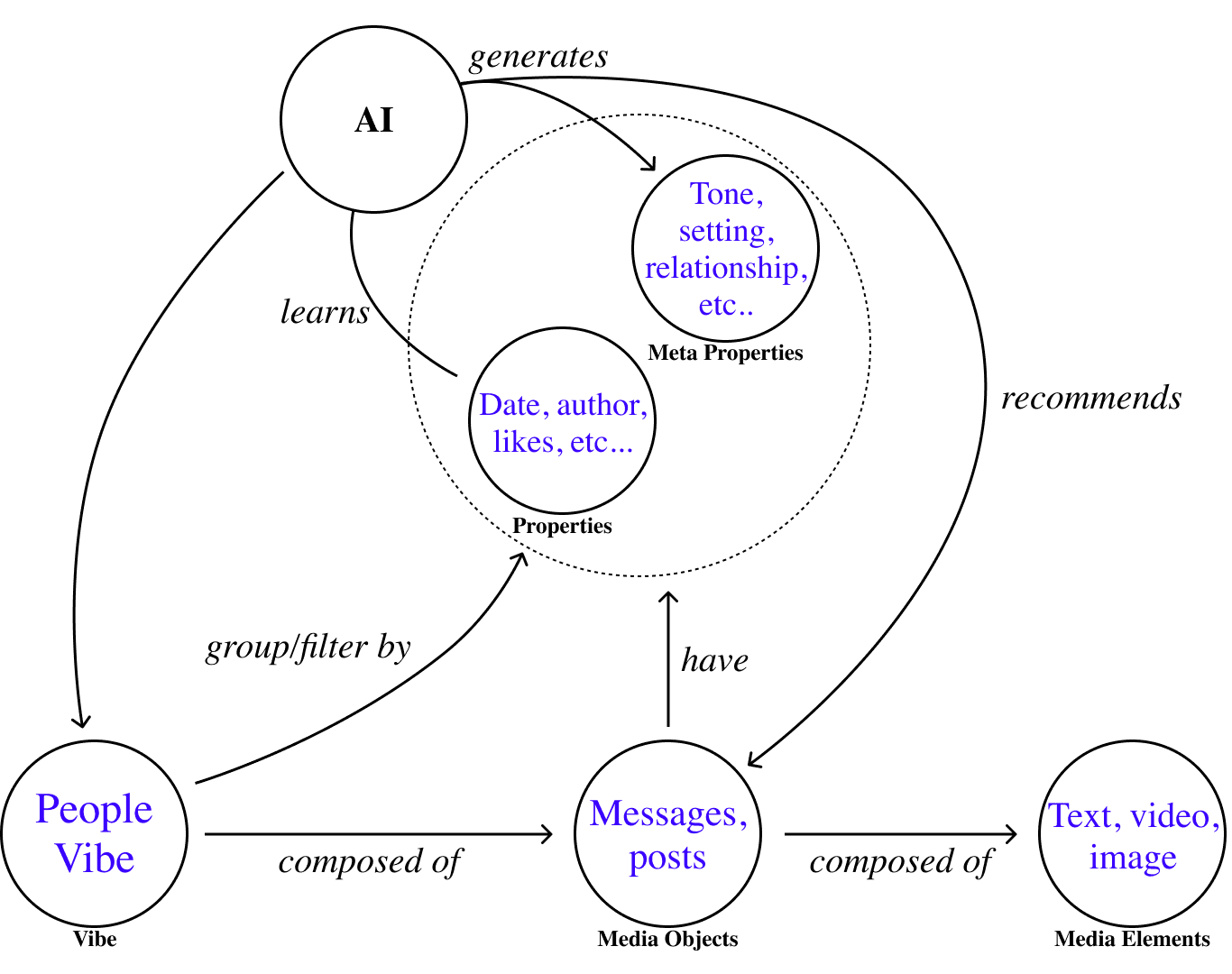

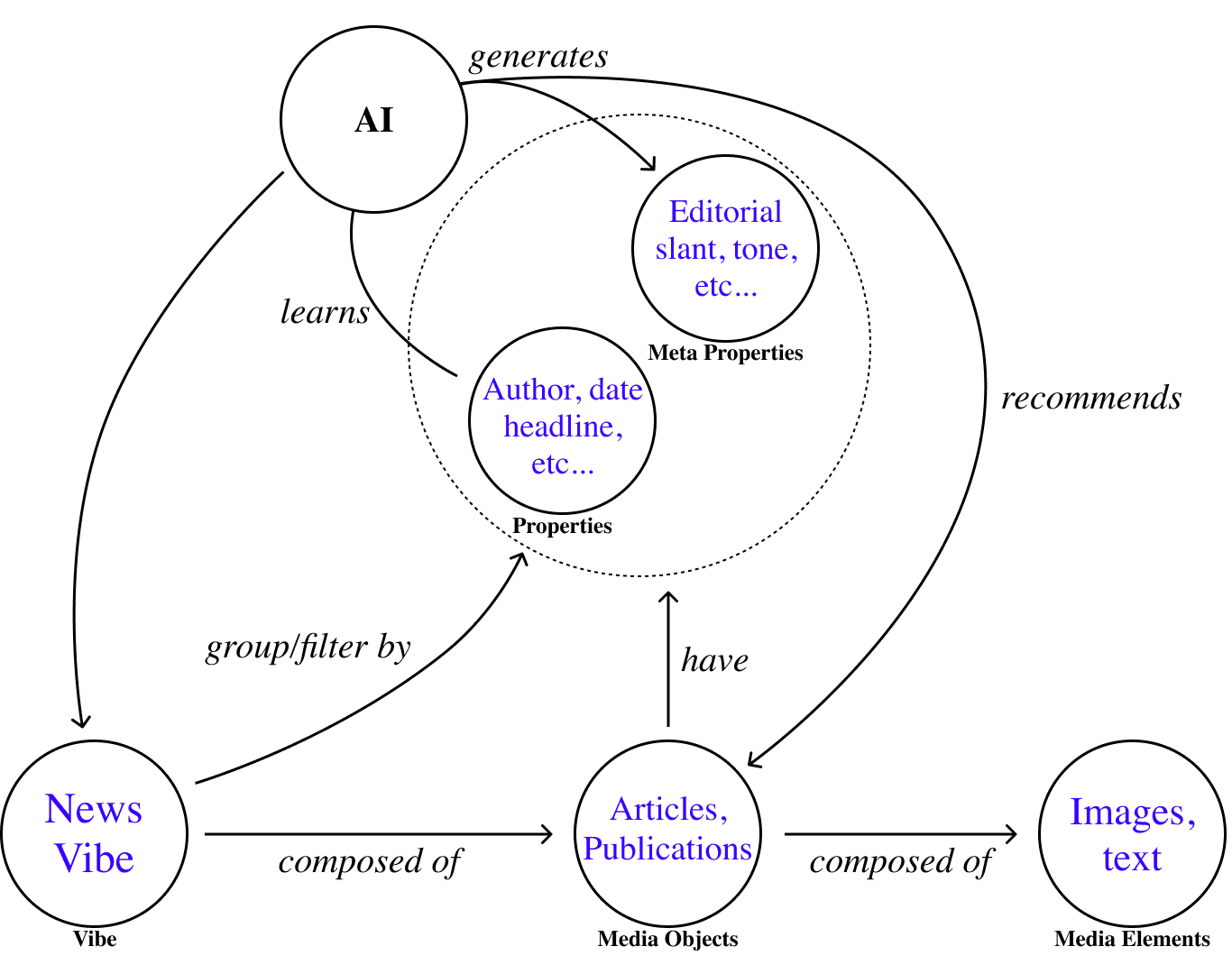

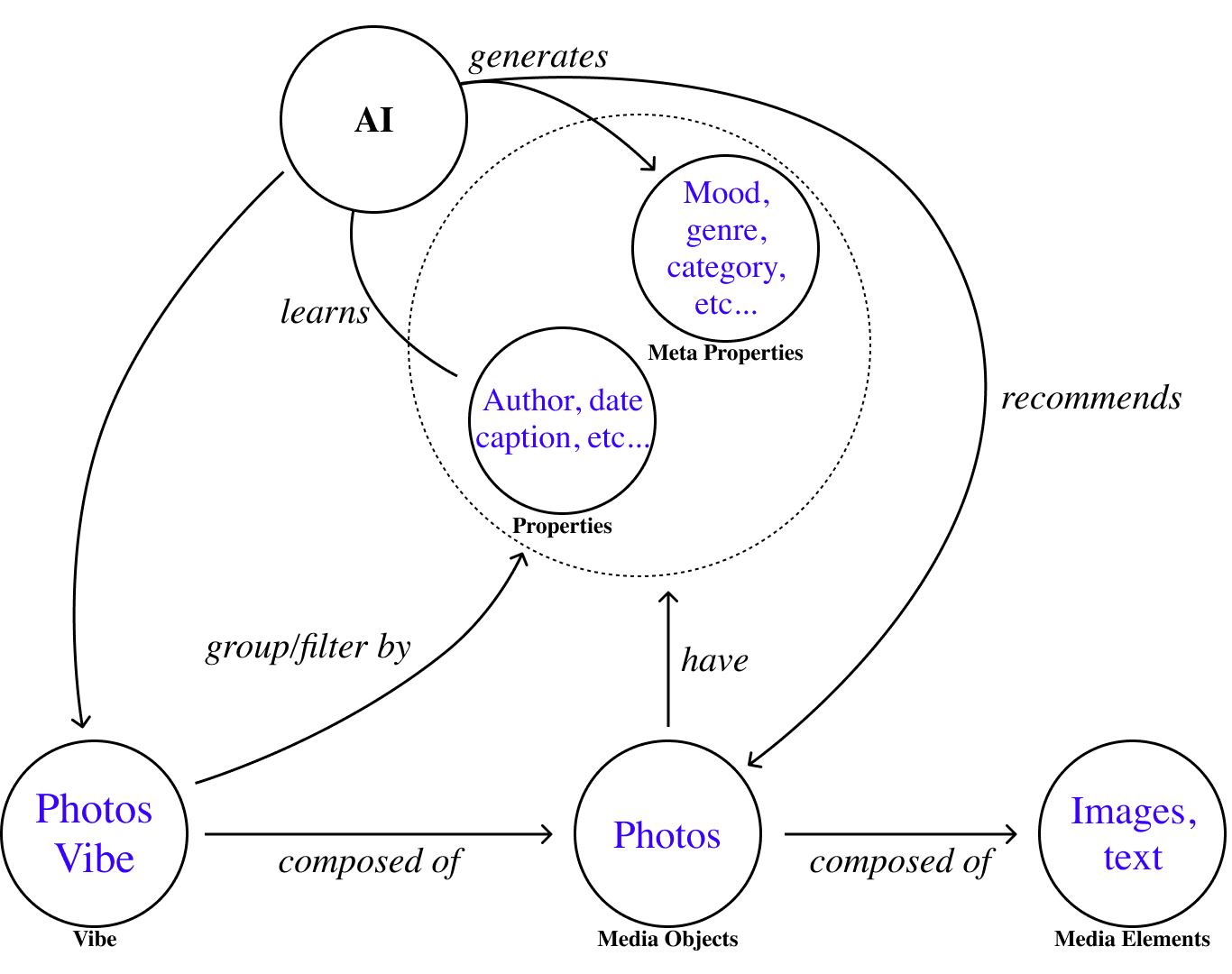

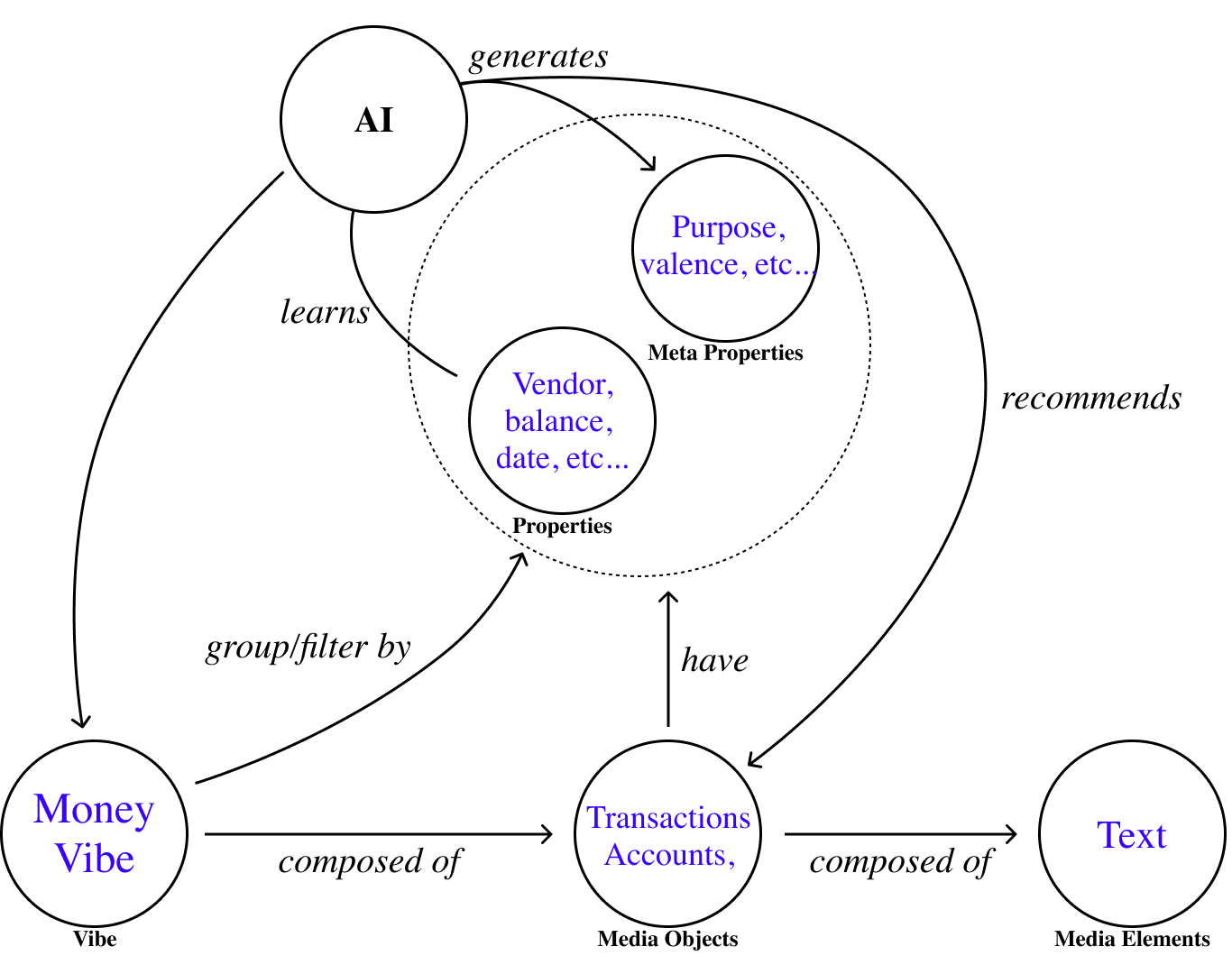

To implement The Vibe, we must build a model of the web flexible enough to describe all of the activity and creation that happens within it. To this end, Vibe-based Computing defines a general ontology of online media:

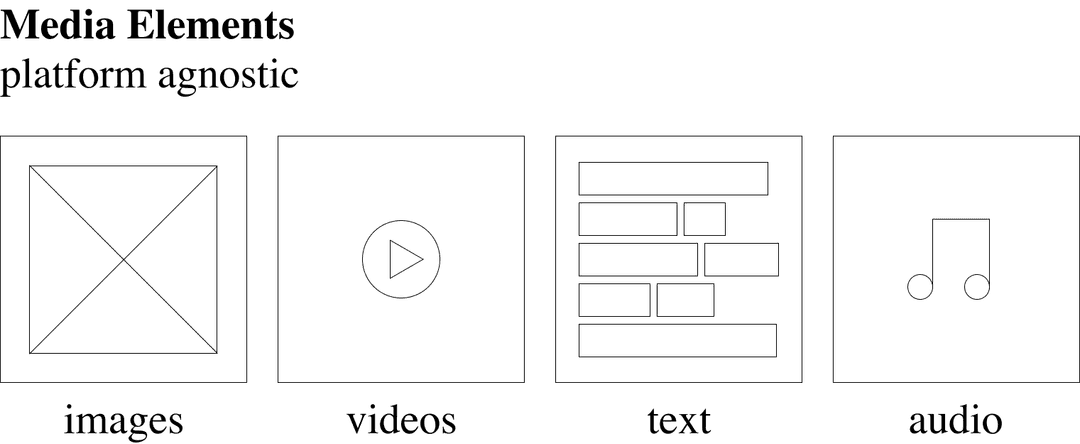

To begin, we define the base Media Elements that make up digital content: images, video, text, audio.

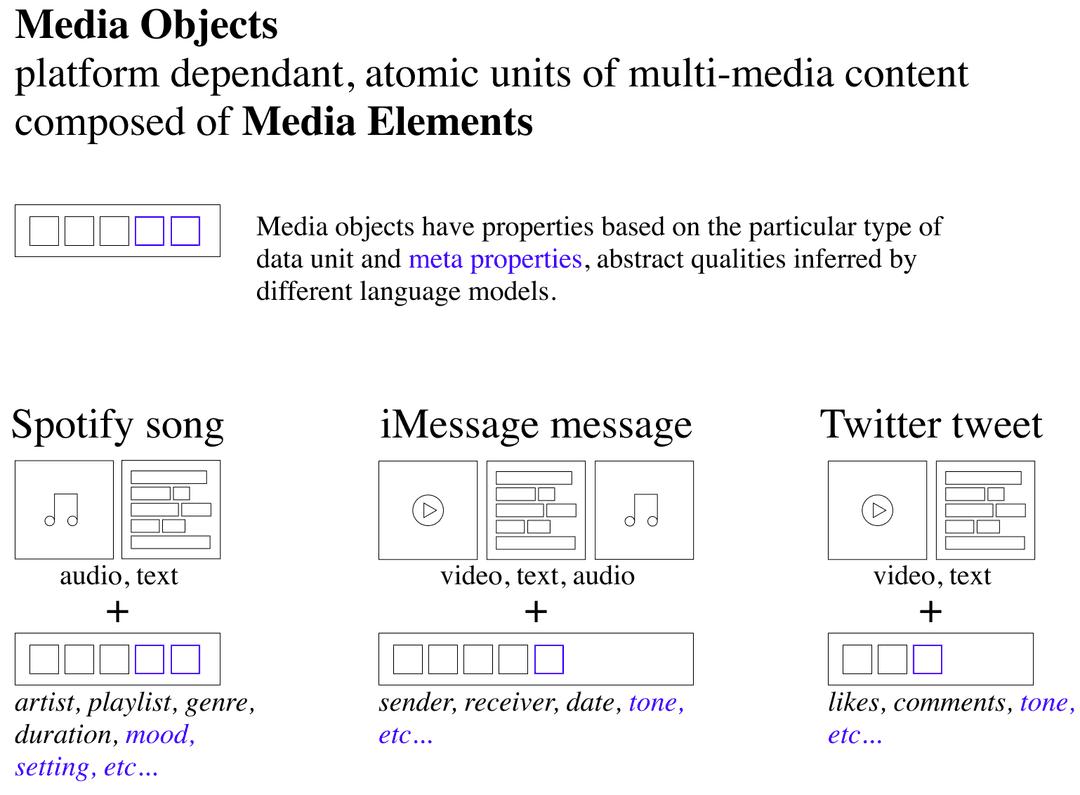

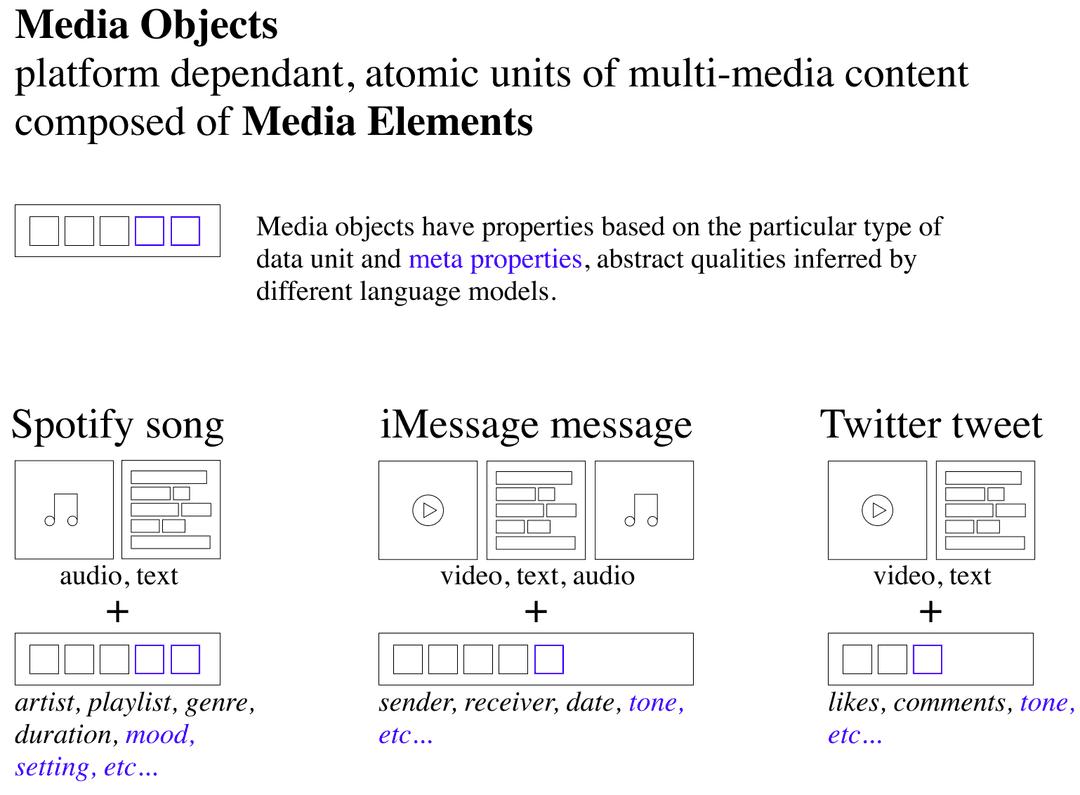

Next, we define Media Objects as the core units of the different platforms on the web. Each Media Object consists of a group of Media Elements, along with a set of static properties defined by the platform's database schema and a set of abstract meta-properties that can be inferred by AI.
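A minimal sketch of these first two layers of the ontology, assuming Python dataclasses as the representation. The names `MediaKind`, `MediaElement`, `MediaObject`, `static_properties`, and `meta_properties` are hypothetical, chosen for illustration; what the sketch shows is the separation described above, with platform-defined static properties kept apart from AI-inferred meta-properties.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Any


class MediaKind(Enum):
    """The four base Media Elements of digital content."""
    IMAGE = "image"
    VIDEO = "video"
    TEXT = "text"
    AUDIO = "audio"


@dataclass(frozen=True)
class MediaElement:
    kind: MediaKind
    uri: str  # where the raw content lives (hypothetical addressing)


@dataclass
class MediaObject:
    elements: list[MediaElement]
    # Fixed by the source platform's schema, e.g. author or like count.
    static_properties: dict[str, Any] = field(default_factory=dict)
    # Abstract properties inferred later by an AI model, e.g. mood or style.
    meta_properties: dict[str, Any] = field(default_factory=dict)

    def kinds(self) -> set[MediaKind]:
        """The kinds of Media Elements this object is made of."""
        return {e.kind for e in self.elements}


# A captioned photo post: one image element plus one text element.
post = MediaObject(
    elements=[
        MediaElement(MediaKind.IMAGE, "file://beach.jpg"),
        MediaElement(MediaKind.TEXT, "file://caption.txt"),
    ],
    static_properties={"author": "@alice", "likes": 42},
)
post.meta_properties["mood"] = "calm"  # written by a model, not the platform
```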

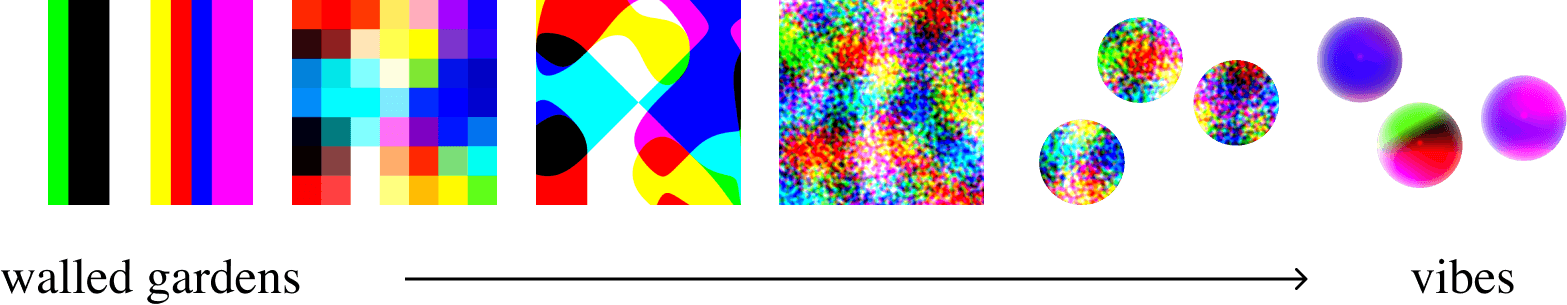

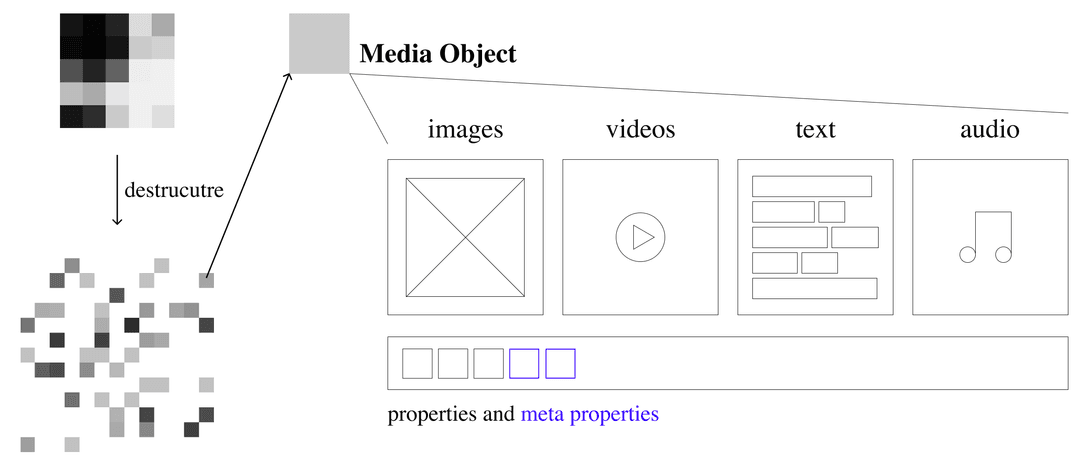

By conceptualizing the content we see on the internet in terms of distinct Media Objects, we can begin to destructure the walled gardens behind every platform into their constituent parts.

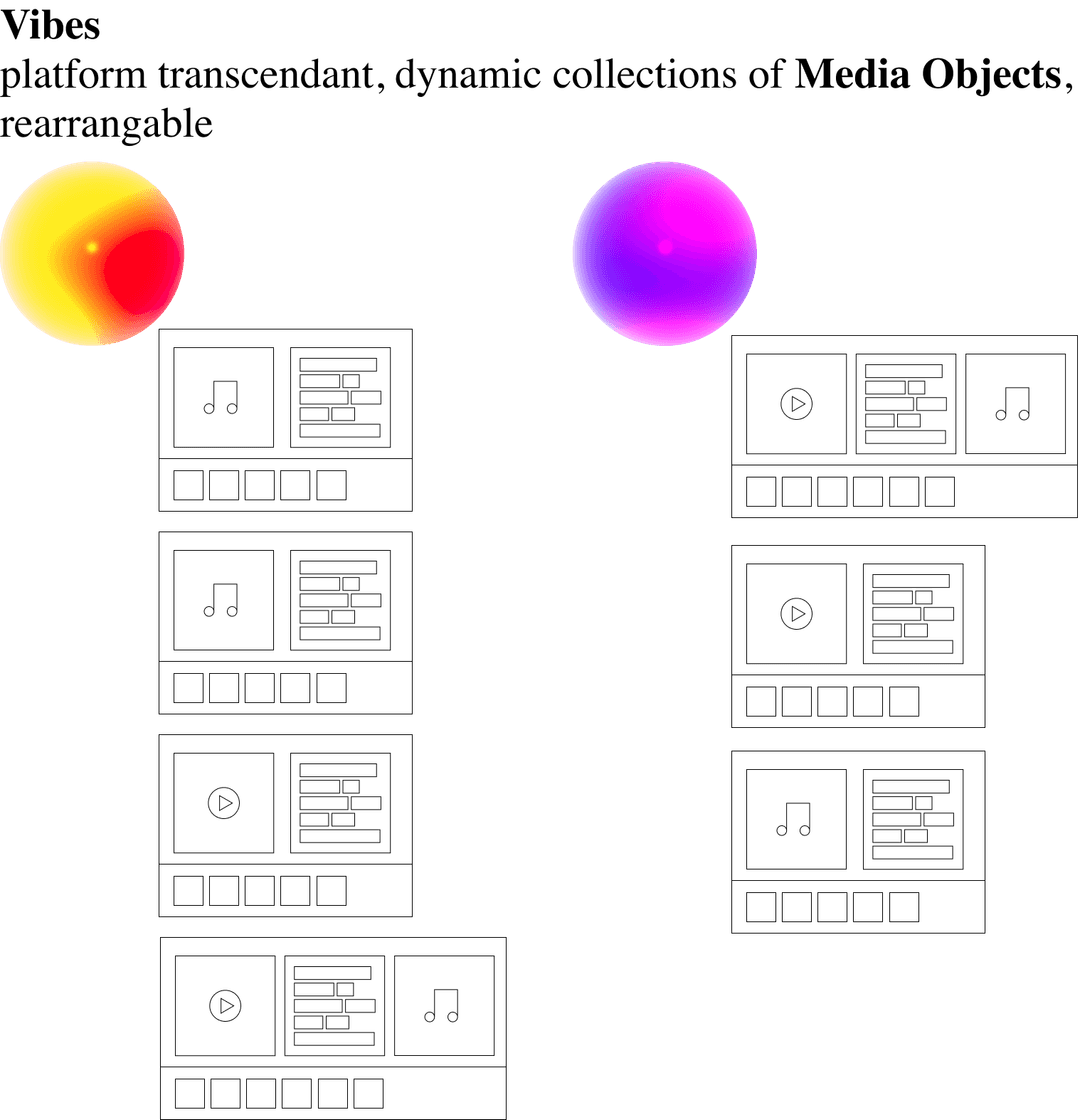

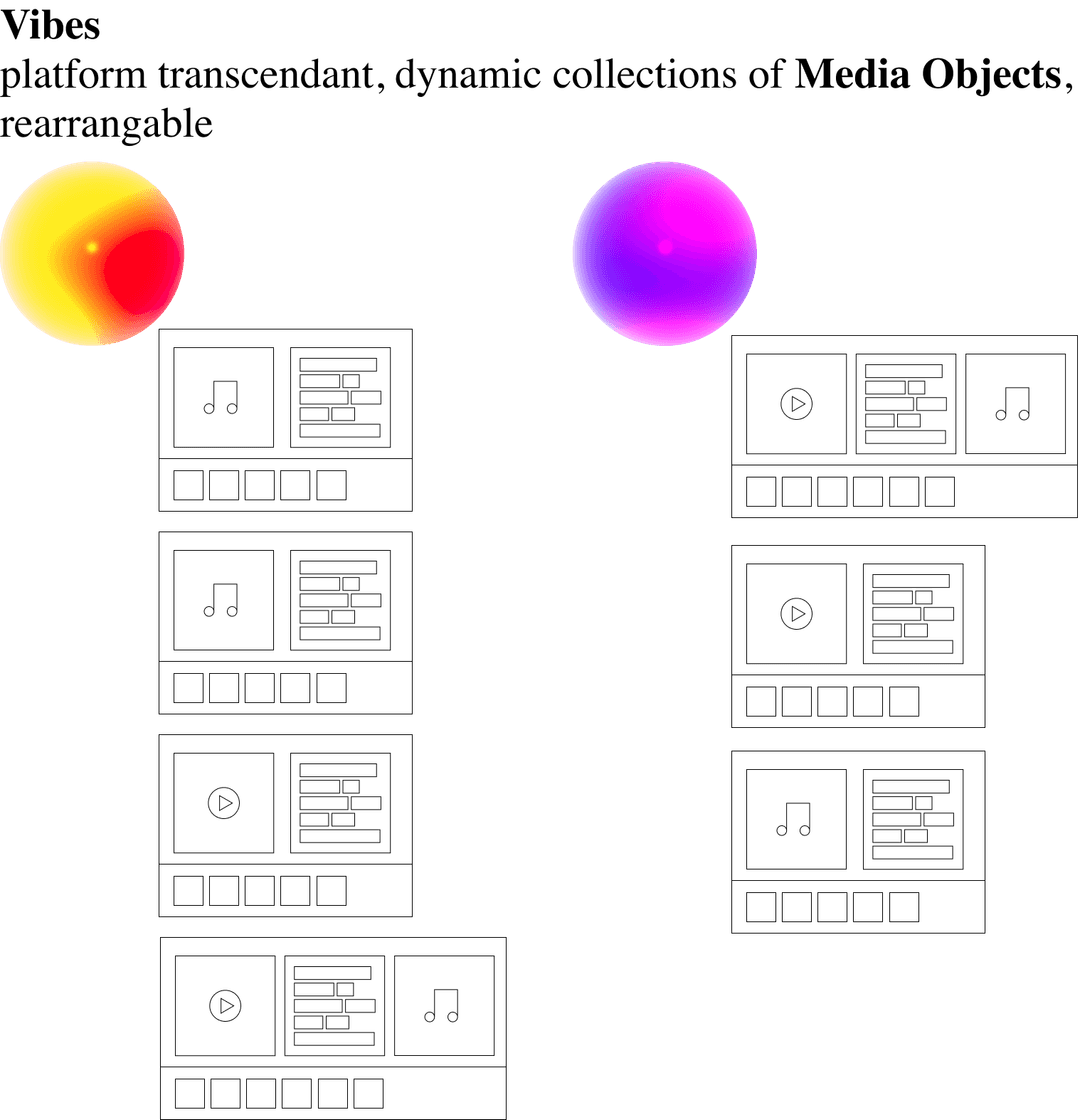

Vibes then arrive as restructured collections of these Media Objects. Vibes are platform transcendent: all forms of internet content (TikTok videos, Pinterest boards, Spotify playlists, etc.) are assimilated into the universal interface The Vibe defines.
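To make "platform transcendent" concrete, the sketch below assumes two hypothetical platform payload shapes (a photo post and a music track, modelled as plain dicts) and writes one small adapter per platform that destructures each payload into the same Media Object shape. The Vibe is then just an ordered collection of those objects that does not depend on where each one came from. All class, function, and field names here are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class MediaObject:
    # (kind, payload) pairs: the payload is a URI or inline text.
    elements: list[tuple[str, str]]
    static_properties: dict[str, Any] = field(default_factory=dict)
    meta_properties: dict[str, Any] = field(default_factory=dict)


# Hypothetical adapters: each one knows a single platform's schema.
def from_photo_post(post: dict) -> MediaObject:
    return MediaObject(
        elements=[("image", post["image_url"]), ("text", post["caption"])],
        static_properties={"platform": "photos", "author": post["user"]},
    )


def from_music_track(track: dict) -> MediaObject:
    return MediaObject(
        elements=[("audio", track["stream_url"])],
        static_properties={"platform": "music", "artist": track["artist"]},
    )


ADAPTERS: dict[str, Callable[[dict], MediaObject]] = {
    "photos": from_photo_post,
    "music": from_music_track,
}


@dataclass
class Vibe:
    name: str
    objects: list[MediaObject] = field(default_factory=list)

    def add(self, platform: str, payload: dict) -> None:
        """Destructure a platform payload, then restructure it inside the Vibe."""
        self.objects.append(ADAPTERS[platform](payload))

    def platforms(self) -> set[str]:
        """Which platforms this Vibe's contents originally came from."""
        return {o.static_properties["platform"] for o in self.objects}


vibe = Vibe("summer")
vibe.add("photos", {"image_url": "https://example.com/a.jpg",
                    "caption": "golden hour", "user": "@alice"})
vibe.add("music", {"stream_url": "https://example.com/t.mp3",
                   "artist": "Some Band"})
```

Supporting a new platform would mean writing one more adapter; the Vibe class itself would not change.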

Vibes play a dual role in computer applications: a) as a UI object for users to consume, curate, and share media, and b) as a technical standard for developers to connect users' media with AI.

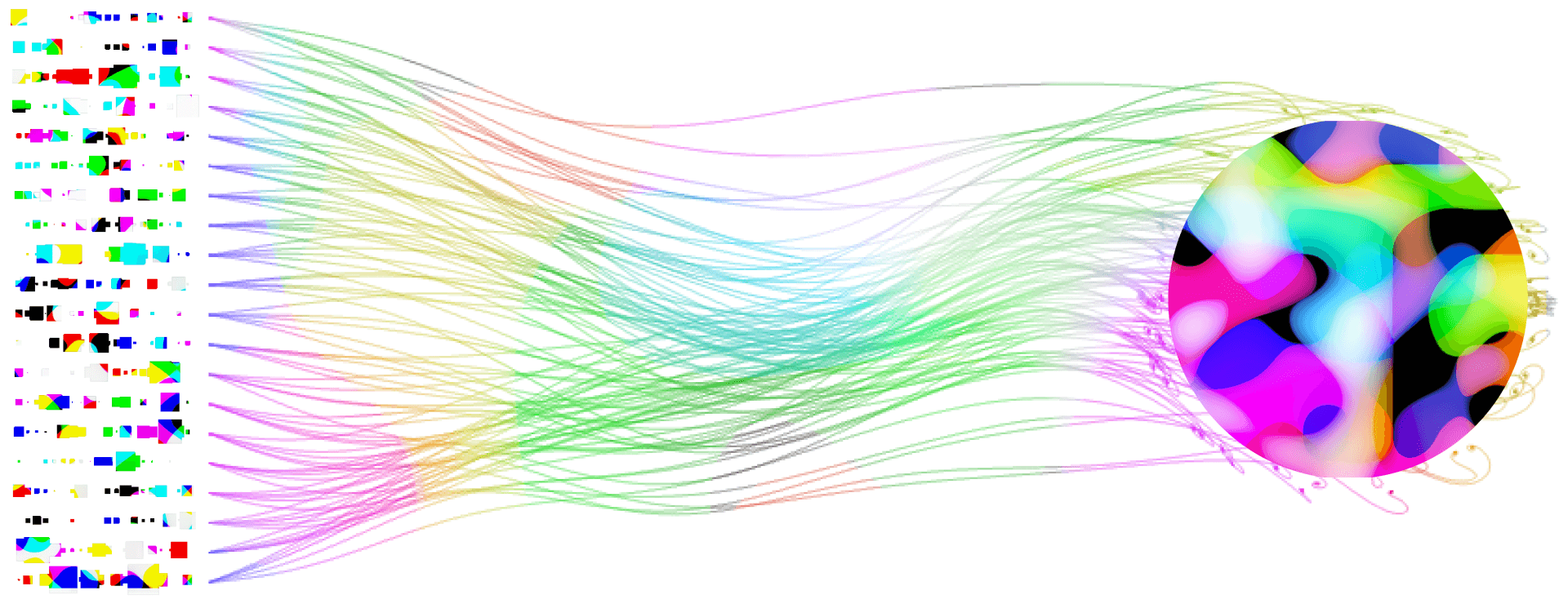

Vibes have two modalities for interacting with AI models: push and pull. Vibes can push their Media Objects to a model for analysis, and they can pull new Media Objects from a model to add to their contents, much like a recommendation algorithm filling a content feed. In a Vibe-based Computing ecosystem, users can take any Vibe they own and upload it to an app that interfaces with AI to understand and transform its contents.
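The push/pull contract could be expressed as a small interface that any model-backed service implements. This sketch assumes Python's `typing.Protocol` for that interface; `VibeModel`, `analyze`, `suggest`, and `TagModel` are hypothetical names. The toy model "analyzes" by counting tags and "suggests" unseen catalog items that share the most common tag, standing in for a real AI model or recommender.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Protocol


@dataclass
class MediaObject:
    id: str
    tags: set[str] = field(default_factory=set)


class VibeModel(Protocol):
    def analyze(self, objects: list[MediaObject]) -> dict: ...
    def suggest(self, analysis: dict, exclude: set[str], n: int) -> list[MediaObject]: ...


@dataclass
class TagModel:
    """Toy stand-in for an AI model: its 'understanding' is a tag histogram."""
    catalog: list[MediaObject]

    def analyze(self, objects: list[MediaObject]) -> dict:
        return {"tags": Counter(t for o in objects for t in o.tags)}

    def suggest(self, analysis: dict, exclude: set[str], n: int) -> list[MediaObject]:
        if not analysis["tags"]:
            return []
        top_tag, _ = analysis["tags"].most_common(1)[0]
        hits = [o for o in self.catalog if top_tag in o.tags and o.id not in exclude]
        return hits[:n]


@dataclass
class Vibe:
    objects: list[MediaObject] = field(default_factory=list)

    def push(self, model: VibeModel) -> dict:
        """Send the Vibe's Media Objects to a model for analysis."""
        return model.analyze(self.objects)

    def pull(self, model: VibeModel, n: int = 2) -> list[MediaObject]:
        """Ask the model for new objects and append them, like a content feed."""
        seen = {o.id for o in self.objects}
        new = model.suggest(self.push(model), seen, n)
        self.objects.extend(new)
        return new


model = TagModel(catalog=[
    MediaObject("c1", {"beach"}),
    MediaObject("c2", {"city"}),
    MediaObject("c3", {"beach", "sunset"}),
])
vibe = Vibe([MediaObject("v1", {"beach"}), MediaObject("v2", {"beach", "film"})])
added = vibe.pull(model)
print([o.id for o in added])  # ['c1', 'c3']
```

Because `pull` is built on `push`, every new object the Vibe receives is conditioned on the Vibe's current contents.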

A basic example of an app built on top of Vibes is an image studio that, given a selected Vibe, applies techniques like LoRA (Low-Rank Adaptation) fine-tuning to capture the underlying style of the images in its Media Objects. This would let users generate and remix images in different aesthetics depending on which Vibe is plugged into the app. And as multimodal models develop, AI will increasingly be able to infer implicit properties like style and mood through a universal interface over all forms of media, unlocking the ability to apply any of your Vibes to this kind of function.
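For readers unfamiliar with LoRA, the standard formulation is worth spelling out, because it explains why a per-Vibe style adapter would be cheap to train and store. A pretrained weight matrix W_0 stays frozen, and only a low-rank update built from two small matrices A and B is learned (alpha is a constant scaling hyperparameter). In the image studio described above, that update would presumably be trained on the images in the selected Vibe; this is an assumption about the app, not a detail given here.

```latex
h = W_0 x + \Delta W x = W_0 x + \frac{\alpha}{r} B A x,
\qquad W_0 \in \mathbb{R}^{d \times k},\;
B \in \mathbb{R}^{d \times r},\;
A \in \mathbb{R}^{r \times k},\;
r \ll \min(d, k)

% Trainable parameters, full update vs. LoRA update, for d = k = 1024, r = 8
dk = 1024 \cdot 1024 = 1\,048\,576
\qquad
r(d + k) = 8 \cdot (1024 + 1024) = 16\,384 \approx 1.56\%\ \text{of } dk
```

Because B is initialized to zero, the update is zero at the start of training, so the adapted model begins identical to the base model. Switching the Vibe plugged into the studio would then mean switching a small (A, B) pair rather than a whole model.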

As we apply Vibes to more complex arrangements of models, use cases emerge beyond simple content creation. For example, imagine a series of generative models, each fed the same Vibe as input, where the first model produces a color scheme, the next a typography pairing, and so on for every component of a design system. Together, these models form an AI-powered theming engine: a user plugs in a Vibe and gets back the design for a personalized web page.
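The theming engine amounts to a pipeline in which every stage sees the same Vibe plus whatever earlier stages have already decided. The sketch below assumes each "generative model" is a plain function. `palette_model`, `type_model`, and `spacing_model` are hypothetical stubs that read a `mood` meta-property and return fixed choices, standing in for real models.

```python
from typing import Callable

Vibe = dict   # stand-in for a Vibe: {"meta": {...}, "objects": [...]}
Theme = dict  # design-system tokens accumulated across stages
Stage = Callable[[Vibe, Theme], Theme]

PALETTES = {"calm": ["#1d3557", "#a8dadc", "#f1faee"],
            "loud": ["#ff006e", "#fb5607", "#ffbe0b"]}
TYPE_PAIRS = {"calm": ("Georgia", "Helvetica"), "loud": ("Impact", "Arial")}


def palette_model(vibe: Vibe, theme: Theme) -> Theme:
    """Stub for a model that proposes a color scheme."""
    return {"colors": PALETTES[vibe["meta"]["mood"]]}


def type_model(vibe: Vibe, theme: Theme) -> Theme:
    """Stub for a model that proposes a heading/body font pairing.
    A real model could also read earlier decisions from `theme`."""
    heading, body = TYPE_PAIRS[vibe["meta"]["mood"]]
    return {"fonts": {"heading": heading, "body": body}}


def spacing_model(vibe: Vibe, theme: Theme) -> Theme:
    """Stub that derives a spacing scale, tighter for 'loud' Vibes."""
    base = 4 if vibe["meta"]["mood"] == "loud" else 8
    return {"spacing": [base * i for i in (1, 2, 4, 8)]}


def build_theme(vibe: Vibe, stages: list[Stage]) -> Theme:
    """Feed the same Vibe through each stage, merging outputs in order."""
    theme: Theme = {}
    for stage in stages:
        theme.update(stage(vibe, theme))
    return theme


theme = build_theme({"meta": {"mood": "calm"}, "objects": []},
                    [palette_model, type_model, spacing_model])
```

Plugging a different Vibe into the same list of stages yields a different design system, which is the sense in which the user "composes" the engine with a Vibe.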

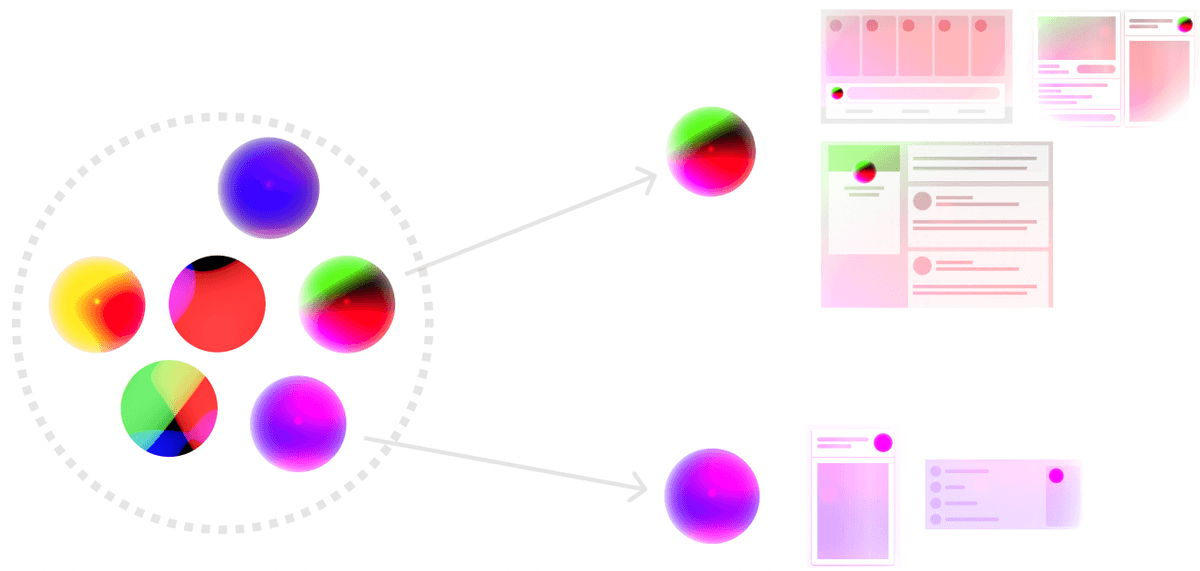

Vibes are objects that “think fast and slow”. When a Vibe is created, generative models are employed to define content-specific meta-properties on each of its Media Objects, creating a semantic understanding of the content that app developers can harness. Then, as a user interacts with the Vibe, bespoke models and heuristic algorithms can also write more context-specific meta-properties to it. That contextual understanding can change both the function of the Vibe in an app's UX and the form of the Vibe as new Media Objects are pulled into it.
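One way to read this is as two write paths into the same meta-property store: a one-off pass when the Vibe is created, and an incremental pass driven by interaction events. This sketch assumes a hypothetical `describe` function standing in for a generative model (it only inspects a file extension) and a simple heuristic that marks frequently reopened objects as favorites. The threshold of three opens is arbitrary and only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class MediaObject:
    uri: str
    meta: dict = field(default_factory=dict)


def describe(obj: MediaObject) -> dict:
    """Stand-in for a generative model that labels content."""
    ext = obj.uri.rsplit(".", 1)[-1]
    return {"medium": {"jpg": "photo", "mp3": "song"}.get(ext, "other")}


@dataclass
class Vibe:
    objects: list[MediaObject]
    opens: dict[str, int] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # Creation time: content-specific meta-properties for every object.
        for obj in self.objects:
            obj.meta.update(describe(obj))

    def record_open(self, uri: str, favorite_after: int = 3) -> None:
        # Interaction time: context-specific meta-properties from a heuristic.
        self.opens[uri] = self.opens.get(uri, 0) + 1
        for obj in self.objects:
            if obj.uri == uri:
                obj.meta["opens"] = self.opens[uri]
                obj.meta["favorite"] = self.opens[uri] >= favorite_after


vibe = Vibe([MediaObject("sunset.jpg"), MediaObject("theme.mp3")])
for _ in range(3):
    vibe.record_open("theme.mp3")
```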

Generative UI

Now that The Vibe has been formally defined, we can begin to consider its implications for Human-Computer Interaction. Applied to UI/UX, for instance, Vibes operate as a powerful new organizing principle for software applications, one that can be explored across a variety of domains.

Vibe-powered Creation

When you hire a designer or artist to make something for you, you don't have to manually specify every detail of what you want. Instead, you give them different references that gesture at the “vibe” of what it should look like, and then they use their own creativity to map that to a completed work. By composing different generative models together under the Vibe interface, developers can enable that same kind of experience but at software scale.

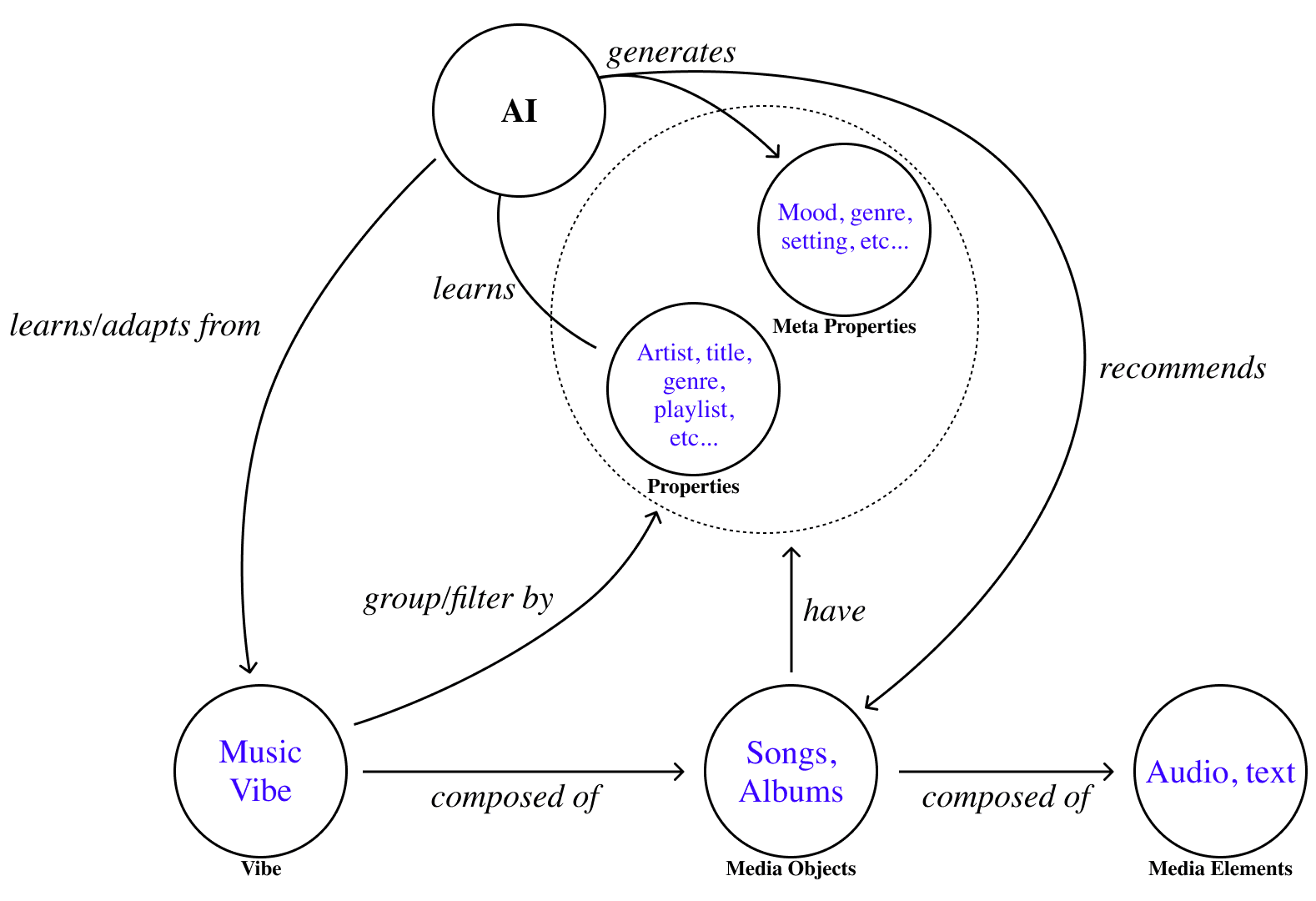

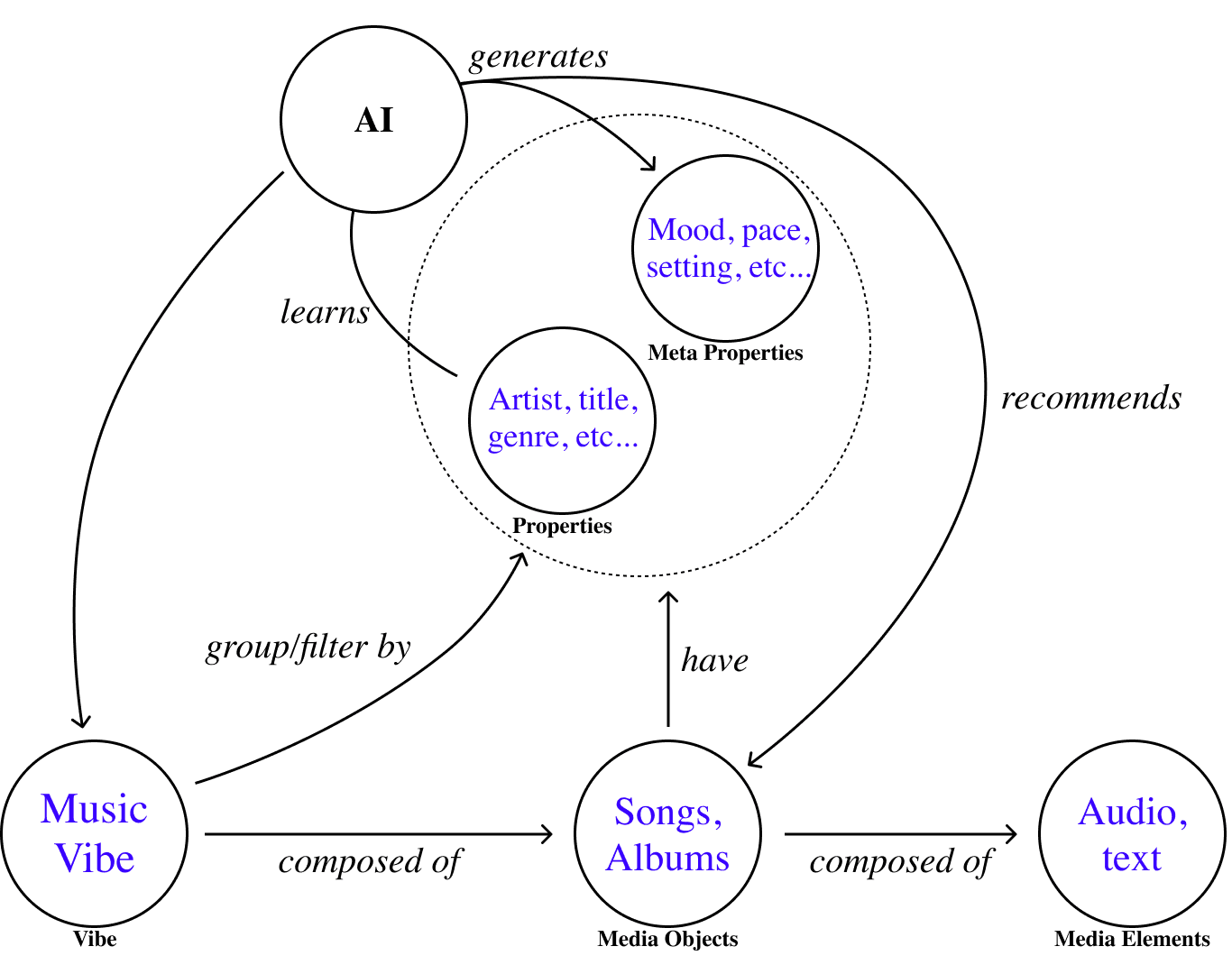

Generating a Music Vibe

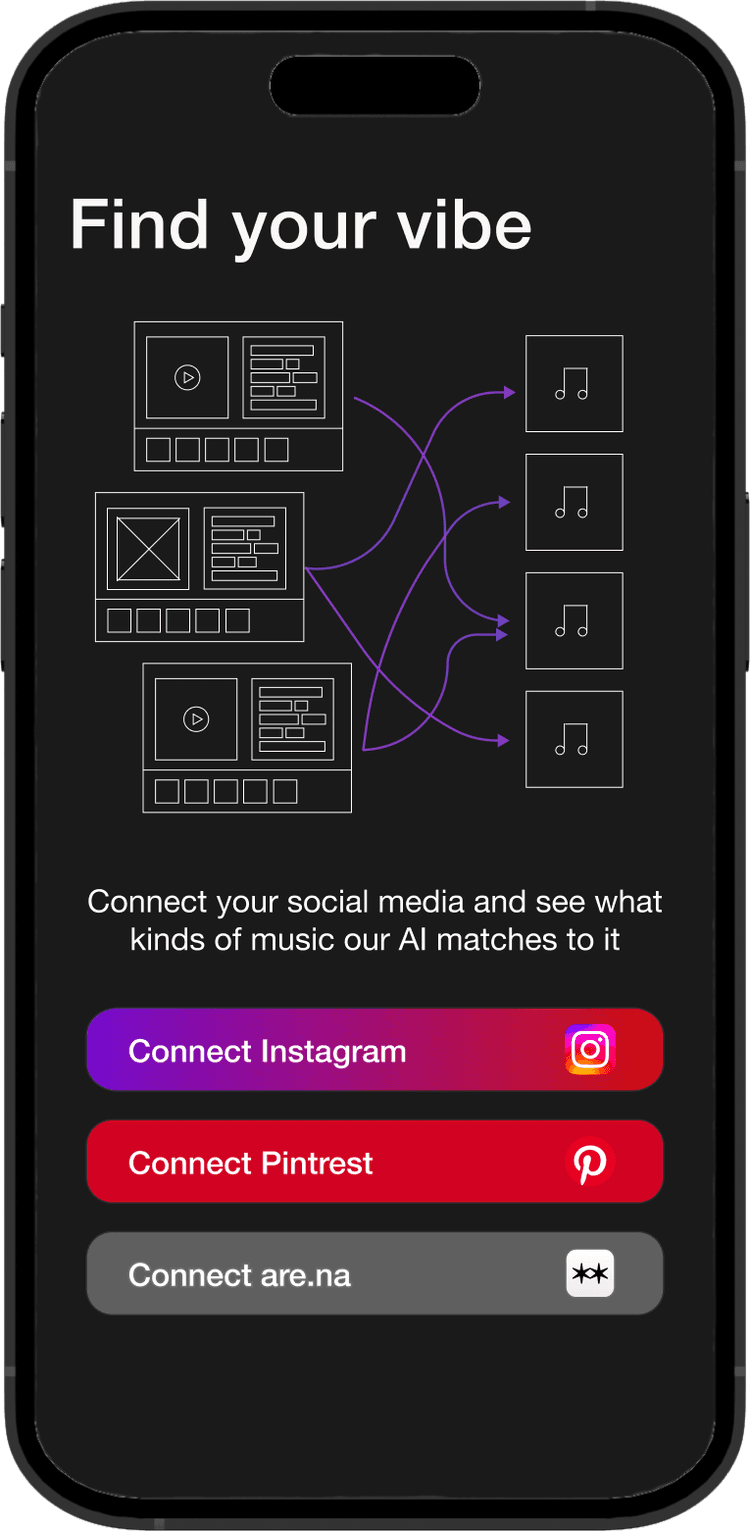

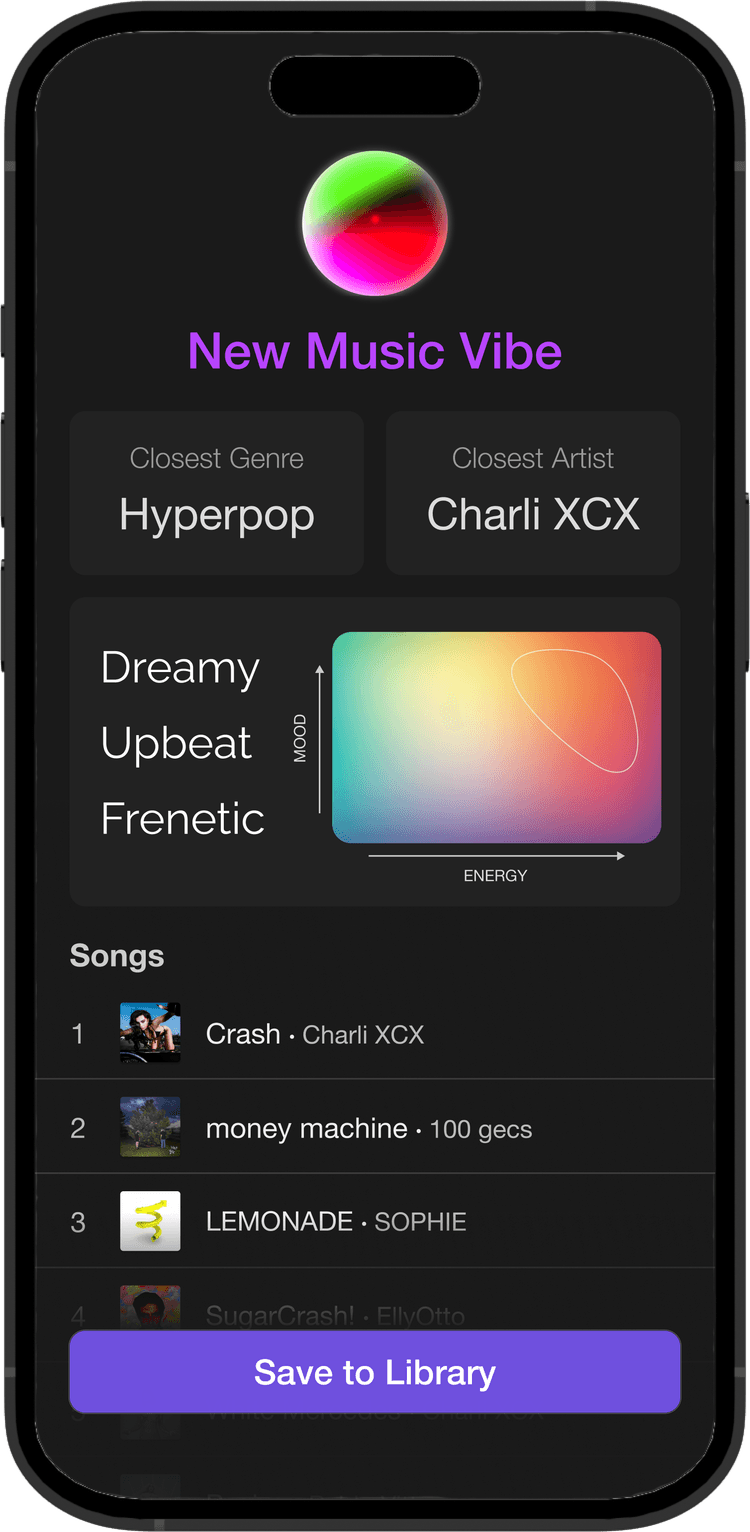

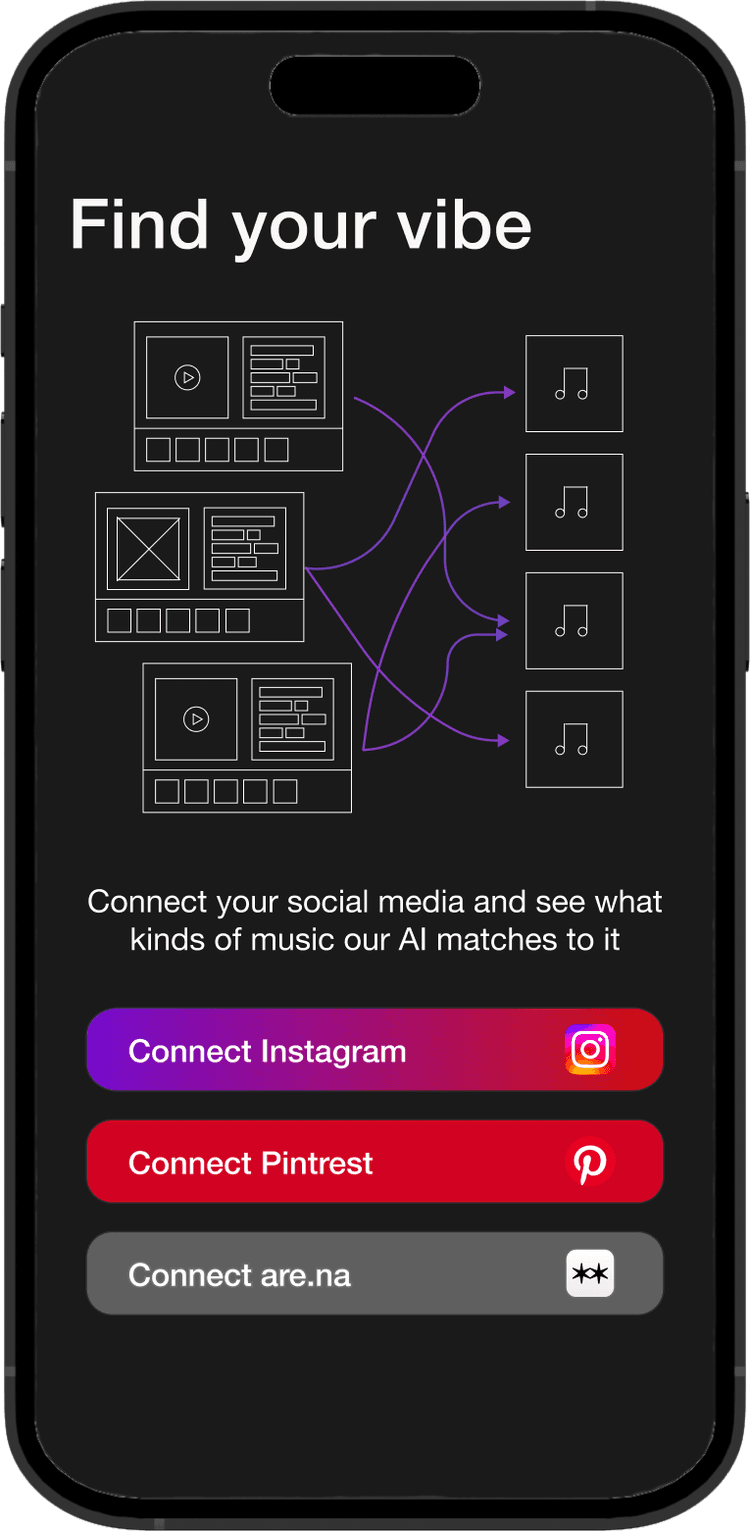

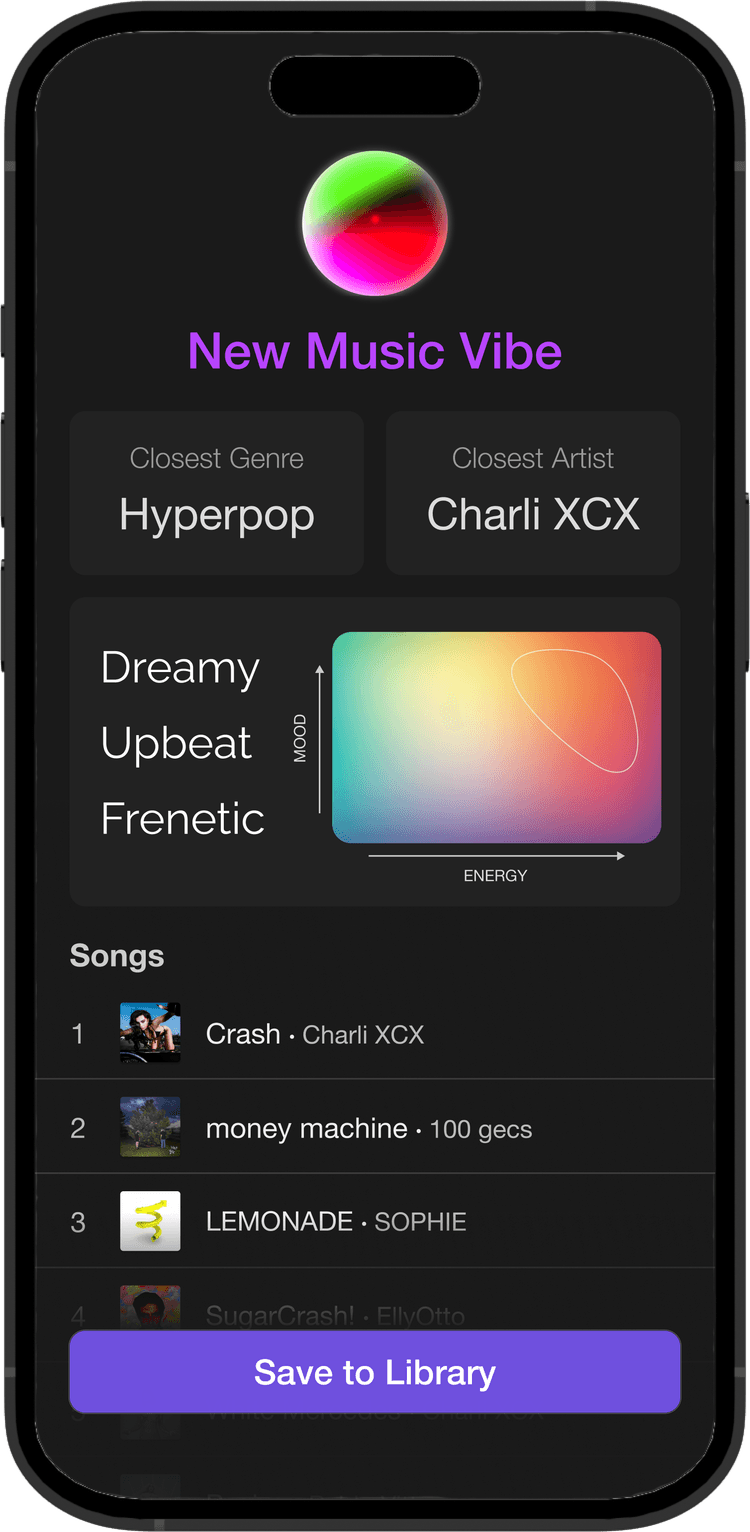

For example, imagine an app that creates a personalized playlist for a user based off a social media account that they connect. This could be implemented by first taking the Media Objects from the connected account and pushing them to an AI model that learns an understanding of their content, and then mapping to a music model that produces a list of related song Media Objects, which then get pulled into a new Vibe and presented to the user.

A Music Vibe UI

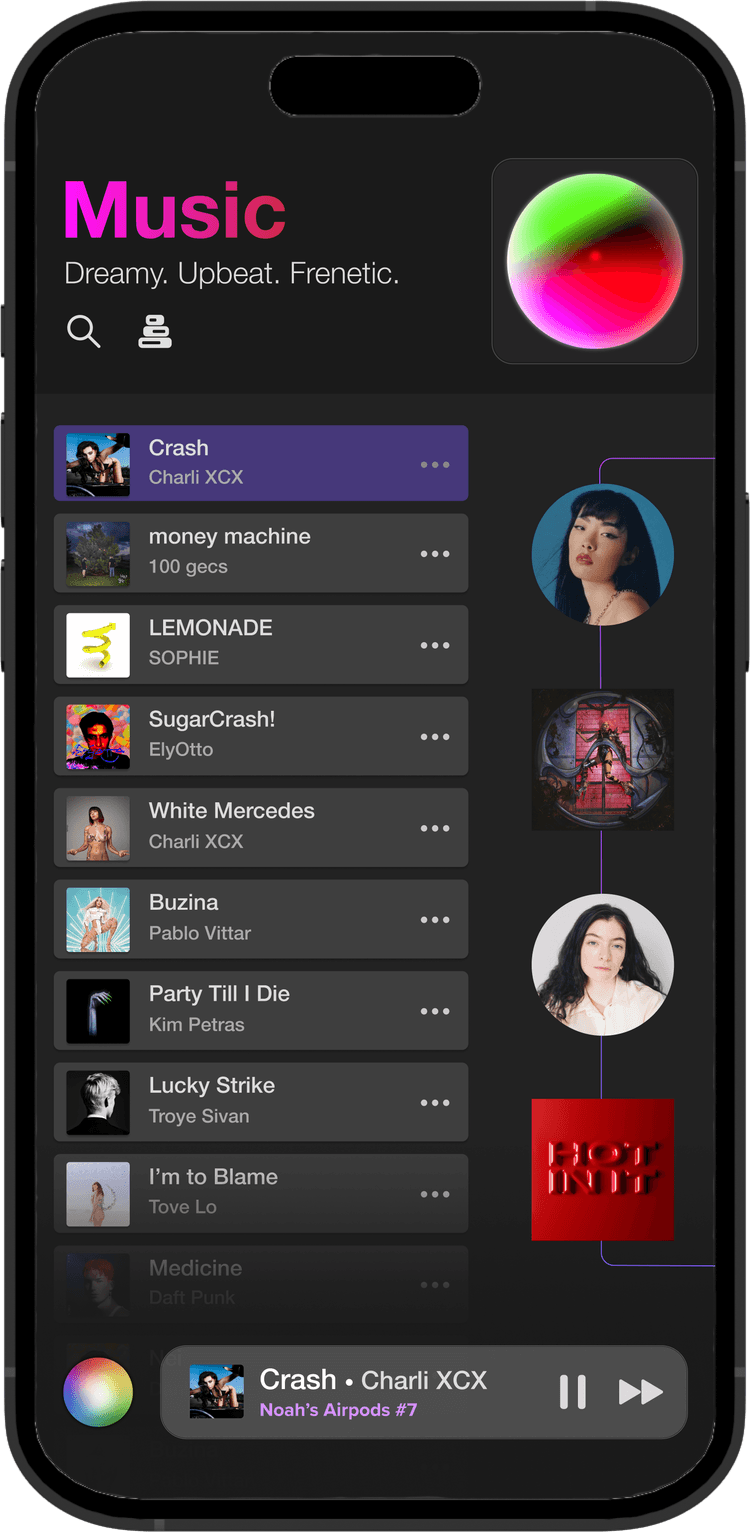

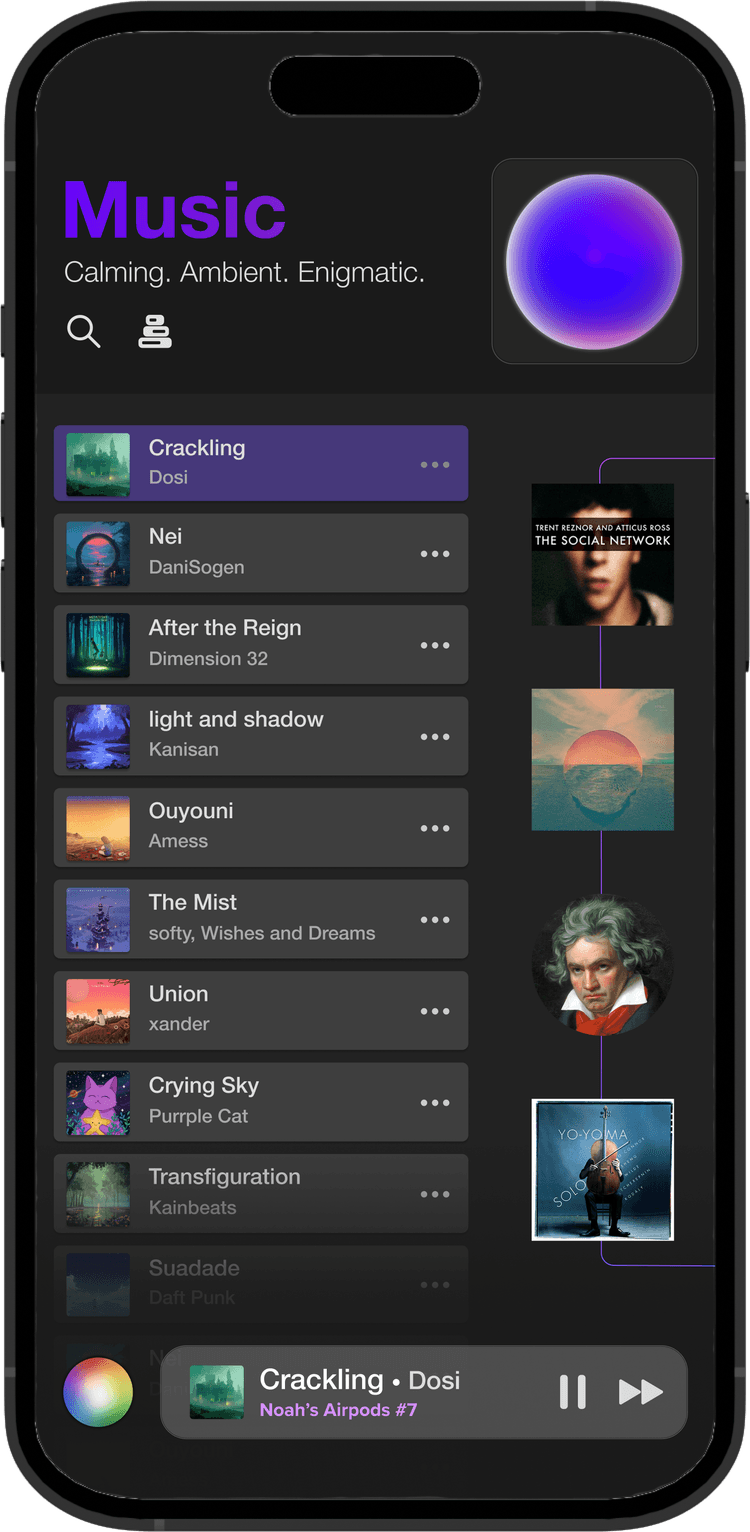

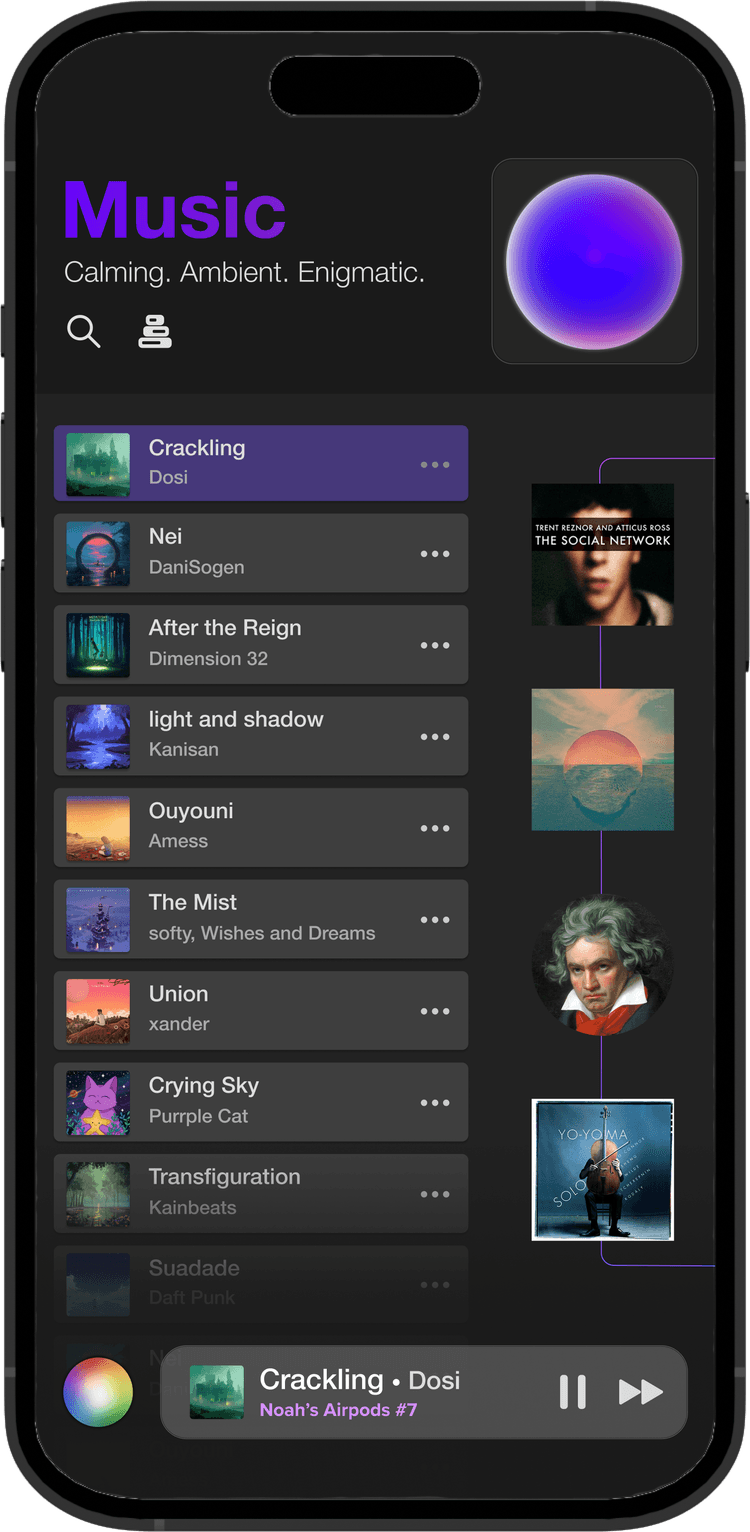

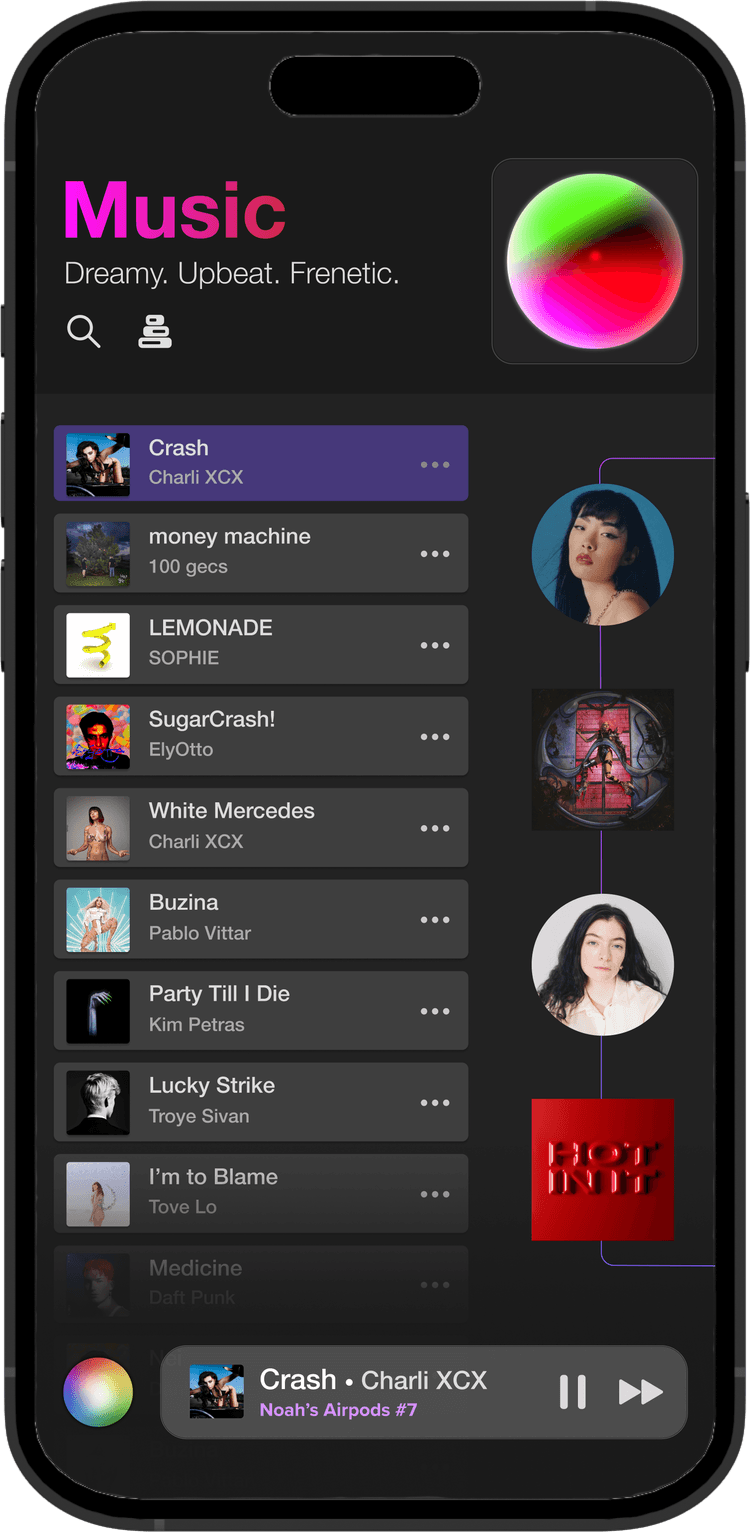

In a Vibe-powered music app, the top level interface moves from the library of playlists to the queue of songs. Music Vibes at first glance look similar to the "Radio" feature on Spotify, able to create an algorithmically generated list of songs derived from a selected playlist/artist/album—but unlike a radio station the Vibe is a living object that can change form in response to its owner.

A Tamagotchi for Music

The Music Vibe evolves continuously with user interaction. If the user skips through tracks from a certain artist, the Vibe will exclude that artist from future recommendations. If they are playing the same couple of tracks on repeat, the Vibe may stop pulling new objects altogether and just loop those songs on repeat. Every new Vibe is brought to life in this co-creative process between user and AI.

Generating a Music Vibe

Vibe-based Computing enables a creative model for apps that mirrors the experience of working with a human professional. When you hire a designer or artist to make something for you, you don't have to manually specify every detail of what you want. Instead, you give them different references that gesture at the “vibe” of what it should look like, and then they use their own creativity to map that to a completed work. By composing different generative models together under the Vibe interface, developers can enable that same kind of experience but at software scale.

Consider, for example, the concept of an app that creates a personalized playlist for a user based off a social media account that they connect. This functionality could be implemented by first taking the Media Objects from the connected account and pushing them to an AI model that learns an understanding of their content, and then mapping that understanding to a music model that produces a list of related song Media Objects. From there, the generated list can be pulled into a new Vibe and presented to the user. This app would let anyone see what kinds of songs matched their Instagram, a task that would require a human curator today.

A Music Vibe UI

In a Vibe-powered music app, the top level interface moves from the library of playlists to the queue of songs. Music Vibes at first glance look similar to the "Radio" feature on Spotify, able to create an algorithmically generated list of songs derived from a selected playlist/artist/album—but unlike a radio station the Vibe is a living object that can change form in response to its owner.

The Music Vibe evolves its song queue continuously with user interaction. If the user skips through tracks from a certain artist, the Vibe will exclude that artist from future recommendations. If they are playing the same couple of tracks on repeat, the Vibe may stop pulling new song objects altogether and just loop those songs on repeat. The UI surfaces different recommended artists, albums, and songs in a widget to the right of the queue, which operates like a sushi conveyor belt, letting the user pick objects off it and drag them into the Vibe to shape its content. The recommendations the user selects in turn inform the new song Media Objects that get pulled into the end of the queue.
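The queue behaviour described above can be sketched as a few rules applied to a stream of listening events. The thresholds (two skips of an artist to exclude it; the last six plays spanning at most two distinct tracks counting as "on repeat") are invented for illustration, as are the class and method names. A real app would presumably tune or learn these rather than hard-code them, and the `candidates` passed to `pull` would come from a model conditioned on the belt picks stored in `seeds`.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class MusicVibe:
    queue: deque = field(default_factory=deque)          # (track, artist) pairs
    excluded_artists: set = field(default_factory=set)
    seeds: list = field(default_factory=list)            # picks off the conveyor belt
    skip_counts: dict = field(default_factory=dict)
    recent: deque = field(default_factory=lambda: deque(maxlen=6))

    SKIPS_TO_EXCLUDE = 2   # hypothetical threshold (class constant, not a field)
    LOOP_MAX_TRACKS = 2    # "the same couple of tracks"

    def on_skip(self, artist: str) -> None:
        self.skip_counts[artist] = self.skip_counts.get(artist, 0) + 1
        if self.skip_counts[artist] >= self.SKIPS_TO_EXCLUDE:
            self.excluded_artists.add(artist)
            self.queue = deque(t for t in self.queue if t[1] != artist)

    def on_play(self, track: str) -> None:
        self.recent.append(track)

    def looping(self) -> bool:
        """True once the recent-play window is full and spans only a couple of tracks."""
        return (len(self.recent) == self.recent.maxlen
                and len(set(self.recent)) <= self.LOOP_MAX_TRACKS)

    def pick_from_belt(self, item: str) -> None:
        """The user drags a recommended artist, album, or song into the Vibe."""
        self.seeds.append(item)

    def pull(self, candidates: list[tuple[str, str]]) -> None:
        """Append new songs to the end of the queue unless the user is looping."""
        if self.looping():
            return
        for track, artist in candidates:
            if artist not in self.excluded_artists:
                self.queue.append((track, artist))


vibe = MusicVibe()
vibe.pull([("a1", "Artist A"), ("b1", "Artist B")])
vibe.on_skip("Artist A")
vibe.on_skip("Artist A")                    # second skip: Artist A is excluded
vibe.pick_from_belt("Artist C")             # user drags a recommendation in
vibe.pull([("a2", "Artist A"), ("c1", "Artist C")])
for track in ["b1", "c1"] * 3:              # the same two songs on repeat
    vibe.on_play(track)
vibe.pull([("d1", "Artist D")])             # ignored: the Vibe is looping
```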

Tangible Algorithms

Across every platform on the modern web, algorithms are constantly working to predict and influence our behavior, nudging us towards addiction, emotional activation, and passive consumption. And at the same time, platforms hide the workings of these algorithms away from us in order to more easily divert our will towards profit-maximizing ends.

Vibe-based Computing seeks to put the user back in the driver's seat. The Vibe, as both metaphor and UI object, decomposes the opaque uni-algorithm of the platform into a collection of friendly, intuitive objects owned by the user. Algorithms become instruments of agency, giving users the power to direct their own experience.

Take the previous example of a music app built on top of Vibes. Users can split their listening across many Vibes: one for lo-fi study music and one for 2000s hip hop, one for Friday night pregames and another for Saturday morning hangovers. The user is placed in control of the algorithm and can differentiate the ways they want to access their content.

If a Vibe’s recommendations become stale, the user can reset its parameters or discard the Vibe altogether and start fresh. And like all Vibes owned by the user, Music Vibes are natively shareable, letting people borrow and swap algorithms with each other.
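Resetting and sharing become simple operations if a Vibe's "algorithm" is kept as plain, user-owned state rather than hidden server-side parameters. This sketch assumes that state is a handful of seeds plus learned preference weights, and serializes it to JSON so it can be handed to someone else. `VibeState`, `reset`, `share`, and `borrow` are hypothetical names.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class VibeState:
    name: str
    seeds: list[str] = field(default_factory=list)           # what the user put in
    learned: dict[str, float] = field(default_factory=dict)  # weights picked up from use

    def reset(self) -> None:
        """Forget learned preferences but keep the seeds the user chose."""
        self.learned.clear()

    def share(self) -> str:
        """Export the whole algorithm as a portable JSON document."""
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def borrow(cls, payload: str) -> "VibeState":
        """Rebuild someone else's Vibe from its shared JSON."""
        return cls(**json.loads(payload))


mine = VibeState("saturday morning", seeds=["lo-fi", "acoustic"],
                 learned={"tempo_slow": 0.8})
friends_copy = VibeState.borrow(mine.share())
mine.reset()  # the friend's copy keeps its learned weights
```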

Towards a Liberatory Internet

All revolutions in computing rely on new organizing metaphors to make their capabilities accessible to a nontechnical audience: the personal computer had the desktop, the internet had the browser, smartphones had the app. Vibes represent the next stage in that evolution for the era of AI computing. In a world of monopoly platforms and surveillance capitalism, Vibes promise to return the web to a model of truly personal computing.

In this new internet, users shape the emergent direction of the Vibes they interact with, adding and removing content, merging Vibes, seeding new Vibes, connecting with others.

The modern internet reduces other people to ordered, simplified representations: collections of ideas and labels. Vibes layer on top of these representations, providing a glimpse of the full multiplicity of being behind each person’s otherwise constrained and channeled online persona. All of this comes together to create a different kind of web, a media ecology where creativity is owned by the user.

...a new way of being, online.

Do I contradict myself?

Very well then I contradict myself,

(I am large, I contain multitudes.)

Walt Whitman, Song of Myself, 51

Current State of Development

To date, most of my work on Vibe-based Computing has been at the conceptual level, exploring the possibilities of the Vibe interface through a Human-Computer Interaction lens. While there is plenty of work left to do there, the next phase of the project, which I have been working on lately, is creating a working prototype of a Vibe-based Computing system. This prototype should demonstrate a proof of concept for the idea and serve as a foundation for a fully developed framework to be released in the future.

To this end, there are two major research areas I have been pursuing:

1) Multimodal generative models

To fully implement the Vibe as described above, we will need a general media AI architecture that can create a mathematical semantic understanding of any piece of media and map that understanding to other models.

The rise of multimodal LLMs, along with innovations like the RAG (Retrieval-Augmented Generation) design, makes such an architecture possible in theory. However, how to implement it is still very much an open question, as is how effective current state-of-the-art AI models will be at creating a deep semantic understanding of any arbitrary piece of media fed into them.

Given the rapid acceleration of the field, I expect many of these problems to have clear solutions within the next year or two. But devising a system that pieces those solutions together in the right way will be a technical challenge in its own right.
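In its simplest dense form, the retrieval half of a RAG-style design reduces to nearest-neighbour search over embeddings. The sketch assumes every Media Object, whatever its modality, has already been mapped into one shared vector space by some multimodal encoder, which is exactly the hard, open part described above. The three-dimensional vectors are made up purely to show the ranking step with cosine similarity, and `retrieve` is a hypothetical helper.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity, defined as 0.0 when either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query: list[float], index: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the ids of the k Media Objects whose embeddings best match the query."""
    ranked = sorted(index, key=lambda oid: cosine(query, index[oid]), reverse=True)
    return ranked[:k]


# Hypothetical embeddings from a shared multimodal encoder.
index = {
    "photo:beach": [0.9, 0.1, 0.0],
    "song:surf-rock": [0.8, 0.3, 0.1],
    "paper:longevity": [0.0, 0.2, 0.9],
}
print(retrieve([1.0, 0.2, 0.0], index))  # ['photo:beach', 'song:surf-rock']
```

Note that the photo and the song are retrieved together: once both live in the same vector space, cross-modal matches fall out of the same similarity computation.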

2) Privacy and data model

Another key part of Vibe-based Computing is creating a new data framework for online media that gives users complete control over their data. Cryptographic identity is the obvious starting point for building this system, with a public/private key pair generated for each user. However, this still leaves open the following questions:

a) Where will a user's data and media be stored? (Locally on the user's device? In a cloud service operated by a corporation?)

b) How will communication between users work in a privacy-preserving way?

c) How can the data framework be designed to have minimal, if not zero, impact on the user-facing experience?

All of these problems have been well researched in fields like cryptography, networking, and cryptocurrency design, but Vibe-based Computing introduces new challenges that will require a novel system design.
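To show what per-user cryptographic identity could buy in practice, the sketch below signs a Vibe's manifest with a user's keypair, so anyone holding the public key can check that the Vibe came from its owner. It uses a textbook Schnorr signature over a deliberately tiny group (p = 2039, q = 1019, g = 4) so it runs with the Python standard library alone. These parameters are a toy and offer no security. A real system would use a vetted scheme such as Ed25519 from an audited library. The `manifest` encoding is also a hypothetical choice.

```python
import hashlib
import json
import secrets

# Toy group: P = 2Q + 1 with Q prime; G = 4 generates the subgroup of order Q.
P, Q, G = 2039, 1019, 4


def keygen() -> tuple[int, int]:
    x = secrets.randbelow(Q - 1) + 1          # private key in [1, Q-1]
    return x, pow(G, x, P)                    # (private, public)


def _challenge(r: int, message: bytes) -> int:
    digest = hashlib.sha256(r.to_bytes(2, "big") + message).digest()
    return int.from_bytes(digest, "big") % Q


def sign(x: int, message: bytes) -> tuple[int, int]:
    k = secrets.randbelow(Q - 1) + 1          # fresh nonce for every signature
    r = pow(G, k, P)
    e = _challenge(r, message)
    return e, (k + x * e) % Q


def verify(y: int, message: bytes, sig: tuple[int, int]) -> bool:
    e, s = sig
    # g^s * y^(-e) = g^(k + xe) * g^(-xe) = g^k = r
    r = (pow(G, s, P) * pow(y, Q - e, P)) % P
    return _challenge(r, message) == e


def manifest(vibe: dict) -> bytes:
    """Canonical bytes for a Vibe, so signer and verifier hash the same thing."""
    return json.dumps(vibe, sort_keys=True).encode()


priv, pub = keygen()
vibe = {"name": "2000s hip hop", "objects": ["song:1", "song:2"]}
sig = sign(priv, manifest(vibe))
print(verify(pub, manifest(vibe), sig))  # True
```

Only the public key and the signature need to travel with a shared Vibe; the private key never leaves its owner, which is one way the questions above about storage and communication could be decoupled from questions of ownership.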

Attribution

The concept of Vibe-based Computing is original to me, as are the UI designs depicted under the "Generating a Music Vibe" and "A Music Vibe UI" sections.

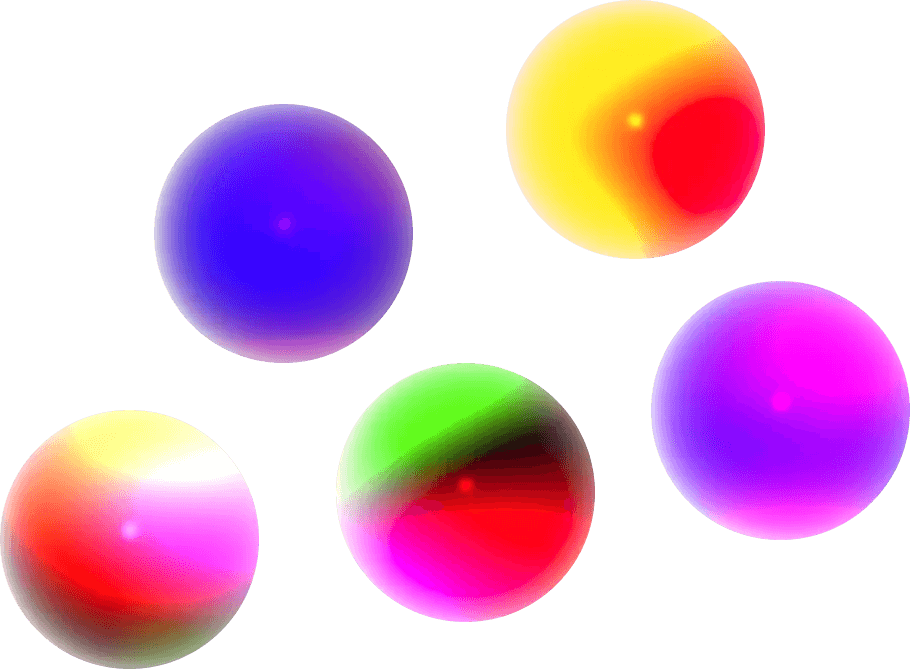

The graphics and Vibe GIF interspersed throughout this portfolio page were made in collaboration with outside designers I hired to better illustrate the different components of the concept I formulated. Further details are available upon request.